The Magic Bracket

or how to entertain yourself and others

Land cards from the Unstable set. Full art lands are the most beautiful MTG cards for me.

TL;DR

Following an MTG Judge's proposal to hold a bracket tournament to find the best card in the game, I participated in the fun by providing (nearly) automated results, and eventually a website to view the progress of any card.

Finding a Project

Reddit really is an amazing place. You can find almost anything there - including fun programming projects to work on! I found one on my 20th birthday. As I was scrolling through the feed, I noticed that the Magic: The Gathering community was getting ready for the Magic Bracket: a 14-round, 16342-contestant tournament to determine the best card ever printed. And you thought March Madness was a huge tournament.
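For the curious, here is a quick sanity check on those two numbers. It only assumes a standard single-elimination bracket; how the organizer actually handled the slots left over in round one is not something I am describing here, so treat the "byes" line as an assumption.

```python
contestants = 16342  # number of cards entered, as quoted above

# A single-elimination bracket halves the field each round, so the number of
# rounds is the smallest r with 2**r >= contestants.
rounds = (contestants - 1).bit_length()
bracket_size = 2 ** rounds

# Slots left without an opponent in round one (assumed to be handled as byes).
byes = bracket_size - contestants

print(f"{rounds} rounds, bracket of {bracket_size}, {byes} empty slots")
```

So 14 rounds is exactly what a field of that size needs: 13 rounds would only fit 8192 cards.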

Now, a question you might have is - hey Ivan, I'm not a nerd like you, can you tell me what Magic: The Gathering is and why the tournament is so huge? Well, MTG is the first trading card game ever created, way before all those Pokemon and WoW card games. Every year since 1993, the company Wizards of the Coast has released multiple sets of cards, which led to over 16000 unique cards designed by 2016 and 19659 cards at the moment of writing this article.1 With such a large pool of cards, as well as over 20 million estimated players in the world2, it makes sense that some cards became loved and even venerated. Thus, the tournament started to find the single best card.

And the tournament was huge! Each day for over a year, 32 matchups came out to vote on. Some people quickly burnt out on voting so much, day after day for months, but a core group of a couple thousand people stayed in through almost the entire two years of voting. I myself voted on only so many batches of matchups before I got tired - but by then I had already started planning to participate in a different way.

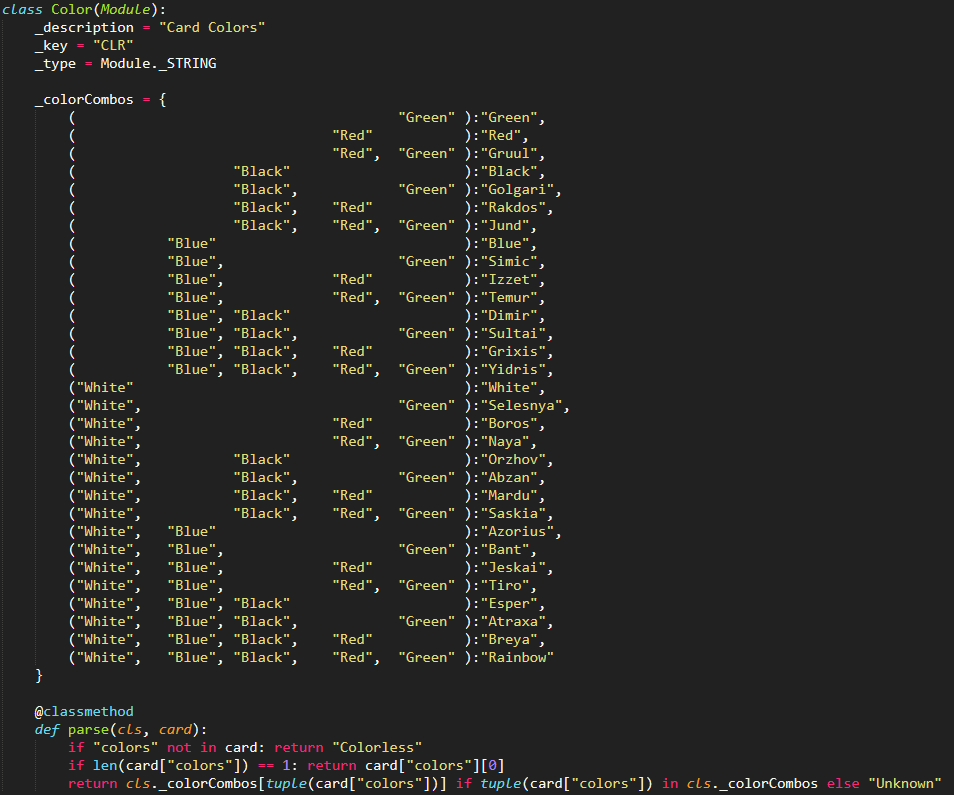

Quick peek at the Color modular class. You can tell the fantasy genre of the game just from the color names.

Coding for the Project

Around 2016, Big Data was getting more and more popular, and here I was, participating in a tournament generating tons of data every day - so it was natural for me to think about how I could apply my coding skills to have fun with the project. I wrote a few lines of Python to start with: open up a JSON file containing information on all the cards, read the tournament handler's nicely formatted results that I manually entered, collect some statistics and print them out to the console. I'd then copy-paste that information into a comment and voila, now Reddit could see the statistics for each day of voting.
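To give an idea of what that first version looked like, here is a minimal sketch of the same flow. The card fields (`name`, `cmc`, `colors`), the result line format and the function names are all assumptions made for this illustration, not the original script or the handler's actual format; the data is inlined so the snippet runs on its own.

```python
import json
import re
from collections import Counter

# Hypothetical card data; in practice this would be read from a JSON file.
CARDS_JSON = """
[
  {"name": "Lightning Bolt", "cmc": 1, "colors": ["Red"]},
  {"name": "Counterspell", "cmc": 2, "colors": ["Blue"]},
  {"name": "Giant Growth", "cmc": 1, "colors": ["Green"]},
  {"name": "Dark Ritual", "cmc": 1, "colors": ["Black"]}
]
"""

# Hypothetical result lines: "<card> (<votes>) vs. <card> (<votes>)".
RESULTS_TEXT = """\
Lightning Bolt (312) vs. Giant Growth (101)
Counterspell (250) vs. Dark Ritual (198)
"""

LINE_RE = re.compile(r"^(.+?) \((\d+)\) vs\. (.+?) \((\d+)\)$")


def parse_results(text):
    """Turn pasted result lines into ((name, votes), (name, votes)) pairs."""
    matchups = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip blank or malformed lines
        name_a, votes_a, name_b, votes_b = match.groups()
        matchups.append(((name_a, int(votes_a)), (name_b, int(votes_b))))
    return matchups


def winner_color_counts(cards_by_name, matchups):
    """Count how many winners of the day belong to each color."""
    counts = Counter()
    for side_a, side_b in matchups:
        winner_name = max(side_a, side_b, key=lambda side: side[1])[0]
        counts.update(cards_by_name[winner_name]["colors"])
    return counts


cards_by_name = {card["name"]: card for card in json.loads(CARDS_JSON)}
matchups = parse_results(RESULTS_TEXT)
color_counts = winner_color_counts(cards_by_name, matchups)

for color, wins in sorted(color_counts.items()):
    print(f"{color}: {wins}")
```

The printed lines are the kind of thing I would paste into a Reddit comment.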

As the weeks went by, I got more and more code in. One of the first things I realized I needed was a saved copy of the results - and as such, I designed a small database-like class that would load both the set of all cards and the set of all results from their JSON representation into a useful set of Python objects, and could save any modifications I made to the results. This opened up a new way to look at the results: I could now supply statistics over a week or even longer time frames.
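A database-like class of this kind can be sketched roughly as follows. The class name `BracketStore`, its methods and the result fields (`batch`, `winner`, `loser`, vote counts) are hypothetical names chosen for the example; the real class was organized differently in its details.

```python
import json
import tempfile
from pathlib import Path


class BracketStore:
    """Loads cards and results from JSON and writes results back on save."""

    def __init__(self, cards_path, results_path):
        self.cards_path = Path(cards_path)
        self.results_path = Path(results_path)
        raw_cards = json.loads(self.cards_path.read_text(encoding="utf-8"))
        self.cards = {card["name"]: card for card in raw_cards}
        if self.results_path.exists():
            self.results = json.loads(self.results_path.read_text(encoding="utf-8"))
        else:
            self.results = []  # first run: nothing saved yet

    def add_result(self, batch, winner, loser, winner_votes, loser_votes):
        """Record one matchup, refusing names that are not known cards."""
        for name in (winner, loser):
            if name not in self.cards:
                raise KeyError(f"unknown card: {name}")
        self.results.append({
            "batch": batch,
            "winner": winner,
            "loser": loser,
            "winner_votes": winner_votes,
            "loser_votes": loser_votes,
        })

    def results_between(self, first_batch, last_batch):
        """Return results for an inclusive range of batches, e.g. one week."""
        return [r for r in self.results if first_batch <= r["batch"] <= last_batch]

    def save(self):
        self.results_path.write_text(json.dumps(self.results, indent=2), encoding="utf-8")


# Usage with throwaway files, so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    cards_file = Path(tmp) / "cards.json"
    results_file = Path(tmp) / "results.json"
    cards_file.write_text(
        json.dumps([{"name": "Lightning Bolt"}, {"name": "Giant Growth"}]),
        encoding="utf-8",
    )

    store = BracketStore(cards_file, results_file)
    store.add_result(1, "Lightning Bolt", "Giant Growth", 312, 101)
    store.save()

    # A fresh instance sees what the previous one saved.
    reloaded = BracketStore(cards_file, results_file)
    first_week = reloaded.results_between(1, 7)
    print(len(first_week), first_week[0]["winner"])
```

Once the results survive between runs, a weekly summary is just a wider batch range.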

Adding a modular system allowed me to collect different statistics by simply including the keyword for that statistic in a list. This included things like Converted Mana Cost, which is the price of playing a card; Colors, which represent the flavour of the card and its mechanics; as well as a couple more. Eventually, I even added the set of artists to these statistics, as each card has incredible art and some people (me included) voted on cards they didn't know based on the art alone. I then applied the modular approach further, by setting up multiple ways of filtering which cards I wanted statistics for, as well as multiple output formats - so that I could see just the names of the cards in question, instead of a giant table of results.
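The keyword idea boils down to a pair of lookup tables. Below is a simplified sketch, assuming each card is a dict with `cmc`, `colors` and `artist` fields; the keywords, the filter names and the `collect` function are made up for this example, and the three cards are just sample data.

```python
from collections import Counter

# Each statistic maps a card to the list of values it contributes.
STATISTICS = {
    "cmc": lambda card: [card["cmc"]],
    "colors": lambda card: card["colors"] or ["Colorless"],
    "artist": lambda card: [card["artist"]],
}

# Each filter decides whether a card is included at all.
FILTERS = {
    "all": lambda card: True,
    "cheap": lambda card: card["cmc"] <= 1,
}


def collect(cards, stat_keys, filter_key="all"):
    """Tally the requested statistics over the cards that pass the filter."""
    keep = FILTERS[filter_key]
    selected = [card for card in cards if keep(card)]
    return {
        key: Counter(value for card in selected for value in STATISTICS[key](card))
        for key in stat_keys
    }


sample_cards = [
    {"name": "Lightning Bolt", "cmc": 1, "colors": ["Red"], "artist": "Christopher Rush"},
    {"name": "Counterspell", "cmc": 2, "colors": ["Blue"], "artist": "Mark Poole"},
    {"name": "Black Lotus", "cmc": 0, "colors": [], "artist": "Christopher Rush"},
]

stats = collect(sample_cards, ["colors", "artist"])
cheap_stats = collect(sample_cards, ["cmc"], filter_key="cheap")

for key, counter in stats.items():
    print(key, dict(counter))
```

Adding a new statistic or filter then means adding one entry to a dict, without touching the collection loop.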

One thing I never bothered with, however, was an automated way to fetch the results from Reddit and post my summaries. I did read up on common approaches, particularly Python's PRAW library, but I decided against it. One of the biggest obstacles to implementing a bot was that I needed a server that could run the bot's script as a scheduled task - and I didn't have anything like that. The closest thing I had was the McGill CS servers, and that's where the last and biggest part of my participation came into play.

Screenshot of the Bracket Database UI. Even Gods fell during this tournament...

Designing a Website

Between running to classes at 8:30 am, going to work, TAing, etc., I found that I never had enough time at home to generate the results. Since my work was in web development, I thought I'd just set up my parser online so I could access it anywhere - even from a phone! A lot of the inspiration for the UI design actually came from my web development work, and thus it became powered by Bootstrap, leading to pretty decent mobile and tablet views. Of course, the custom page where I manually entered the text to parse was absolutely ugly - a single form without any CSS - but no one except me saw it, so it didn't matter.

Finally, I wanted to be a bit different from other people having similar ideas, such as here and here [no HTTPS], so I changed what my website supplied to its users. Not only could it show results for a specific batch or a specific card, it was also re-engineered to expose an API. In theory, people could now use it to pull results for their own purposes.

Conclusion

On November 1st, 2018, exactly two years after the first batch was set up, the project was done. The final was something most participants predicted - two of the most iconic and most reprinted cards in MTG history faced off, and Lightning Bolt ended up winning. Since then, I haven't made any further progress on it, although I do have a follow-up project in mind.

With today's hype in ML, it would be an interesting project to set up an "A vs B" voting website to collect the results and attempt to train a model, so we could predict how new cards would be received by the players. With 20000 cards, almost 200 million unique pairings can be created (or almost 400 million if you count A vs B and B vs A separately): now that's some Big Data.
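As a quick check on the size of that pairing space, here is the arithmetic, counting matchups both ways: once treating "A vs B" and "B vs A" as the same pairing, and once treating them as different. It needs Python 3.8 or newer for `math.comb` and `math.perm`.

```python
import math

cards = 20000  # rounded card count used above

# Unordered pairings: "A vs B" is the same matchup as "B vs A".
unordered_pairings = math.comb(cards, 2)

# Ordered pairings: the position of each card in the matchup matters.
ordered_pairings = math.perm(cards, 2)

print(f"{unordered_pairings:,} unordered, {ordered_pairings:,} ordered")
```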