Introduction to the problem

Dialogue task: given a short conversation between two users, the goal is to generate what the next user will say.

Two types of dialogue

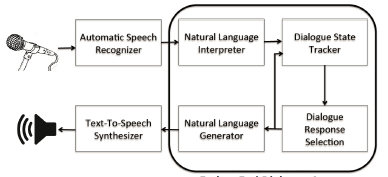

Task oriented

We have a notion of "goal" or "achievement" -- e.g. send a text message to someone

We know if the task was achieved; as a result of that, we can have a reward signal -- e.g. was the message sent or not?

Modular architecture:

| Pros (compared to non-task oriented dialogue systems) | Cons |
|---|---|
| Easier to train | Restricted to the task-specific domain |
| Requires less data | Often requires significant human feature engineering |
| So far achieves better results | Doesn't generalize to general-purpose dialogue |

Non-task oriented

No clear notion of goal, we just want to "discuss" some topic.

Since no clear task, very hard to have a "completion metric" or a reward signal (e.g. number of times the user interacts with our system?)

End-to-end architecture:

No need for pre-defined states or action space representation (learned during training).

Once the architecture is specified, all that is needed to converse in another domain is new training data.

Two types of models

Retrieval based

Return the "most likely" response from a database (i.e. train set) for a given conversation history.

+ Syntax very similar to human expectations, responses are almost never generic.

+ Easier to evaluate (recall@k).

- Domain limited by the training set.

- Responses are often off topic, since they are also very specific.

e.g. Dual Encoder - [1506.08909] The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems
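As a minimal sketch of the recall@k metric mentioned above: for each test context the model ranks a list of candidate responses, and recall@k is the fraction of contexts whose true response appears among the top k candidates. The function name and the integer candidate ids are illustrative assumptions, not part of the original code.

```python
def recall_at_k(ranked_lists, true_ids, k):
    """Fraction of examples whose true response id is in the top-k ranked ids."""
    hits = sum(
        1 for ranked, truth in zip(ranked_lists, true_ids) if truth in ranked[:k]
    )
    return hits / len(true_ids)


# Two contexts, three candidate responses each (ids are hypothetical).
ranked_lists = [[3, 1, 2], [2, 3, 1]]
true_ids = [1, 1]
r_at_1 = recall_at_k(ranked_lists, true_ids, 1)
r_at_2 = recall_at_k(ranked_lists, true_ids, 2)
r_at_3 = recall_at_k(ranked_lists, true_ids, 3)
```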

Generative based

Learns a distribution over the vocabulary at each time step, much like a language model.

+ Much more flexible: not restricted to a domain, but still restricted to a vocabulary.

+ Responses usually more "on topic" than a retrieval model.

- Hard to train, prone to generate generic responses (because generic responses can fit a wide variety of contexts).

- Very hard to evaluate: what is considered a "valid" response? Is it sufficient to measure word overlap with the ground truth?

e.g. Hierarchical Recurrent Encoder Decoder (HRED) - [1507.04808] Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models

Definitions

- A dialogue is formed of multiple turns between TWO users.

- A turn corresponds to one user saying something. The next turn is the other user saying something. Each turn is formed of one or more utterances. The end of turn tag in our corpus is "__eot__".

- An utterance is considered as one message a user sent. Note that in a chat platform, a user can send multiple consecutive messages before getting a reply. The end of utterance tag in our corpus is "__eou__".

Here's an example of a chat:

ummm ubuntu install got stucked at starting up the partion 45 % ... __eou__ why does it get stuck there ? __eou__ can somebody help me ? : ) __eou__ __eot__

is that in an installed ubuntu , or the livecd ? __eou__ did you md5 test the iso you transferred ? __eou__ __eot__

should i download from somewhere else ? __eou__ __eot__

try downloading it manually and md5 testing it yourself , then use unetbootin on that __eou__ __eot__

- A context is the beginning of a dialogue, cut after a random number of turns.

- A response is the next turn coming after the context. We always consider a response to be ONE turn.

The true response is considered to be the actual turn coming after the context in our data set.
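The definitions above can be sketched in a few lines of Python: split a dialogue on the `__eot__` and `__eou__` tags, then cut it after a random number of turns to obtain a (context, true response) pair. The function names are hypothetical and the cut-point policy (at least one context turn, exactly one response turn) is an assumption consistent with the definitions.

```python
import random

EOU, EOT = "__eou__", "__eot__"


def split_turns(dialogue):
    """Split a dialogue string into turns, each turn being a list of utterances."""
    turns = []
    for raw_turn in dialogue.split(EOT):
        utterances = [u.strip() for u in raw_turn.split(EOU) if u.strip()]
        if utterances:
            turns.append(utterances)
    return turns


def make_example(turns, rng):
    """Cut after a random number of turns and return (context, true_response)."""
    cut = rng.randint(1, len(turns) - 1)  # keep >= 1 context turn and 1 response
    return turns[:cut], turns[cut]


dialogue = (
    "is that in an installed ubuntu , or the livecd ? __eou__ "
    "did you md5 test the iso you transferred ? __eou__ __eot__ "
    "should i download from somewhere else ? __eou__ __eot__ "
    "try downloading it manually and md5 testing it yourself , "
    "then use unetbootin on that __eou__ __eot__"
)
turns = split_turns(dialogue)
context, response = make_example(turns, random.Random(0))
```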

We can now reformulate our task based on the above definitions: given a context, we want to predict a "valid" response.

What does "valid" mean? How do we correctly evaluate a dialogue system? Still an open question...

Ubuntu Corpus

Information based on the journal paper: Training End-to-End Dialogue Systems with the Ubuntu Dialogue Corpus (Lowe et al., Dialogue & Discourse).

High-level description of dialogue types

- Collection of logs from Ubuntu-related chat rooms on the Freenode Internet Relay Chat (IRC) network. Each chat room (or channel) has a particular topic, usually used for obtaining technical support with various Ubuntu issues. Every channel participant can see all the messages posted on that channel.

- Most interactions follow a similar pattern: a new user joins the channel and asks a general question about some problem, another user replies with a potential solution, after first addressing the 'username' of the first user. This is called a name mention and is done to avoid confusion in the channel. There can be up to 20 simultaneous conversations in popular chat rooms.

- A conversation generally stops when the problem has been solved, though some users occasionally continue to discuss a topic not related to Ubuntu.

Creation of data set

- Extract dyadic dialogues:
  - Convert every message into 4-tuples of (time, sender, recipient, utterance) with recipient defined as:
    - if the first word of the utterance matches the username dictionary and is not a very common English word (GNU Aspell checking dictionary used), then it's a recipient.
    - if no match is found, the utterance is assumed to be an initial question and the recipient value is left empty.
  - Group tuples where sender & recipient match:
    - the extraction algorithm works backward from the first response (utterance with a recipient) to find the initial question (most recent utterance by the recipient of the first response) within a time frame of 3 minutes.
    - only consider dialogues of 3 turns or more.
    - if there are multiple first responses from different users, consider them all as different dialogues (very rare compared to the size of the data set).
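The recipient rule described above can be sketched as follows. This is an illustration only: the function names are hypothetical, `common_words` is a plain set standing in for the GNU Aspell dictionary, and stripping a trailing `:` or `,` from the first word is an assumption about how name mentions are written.

```python
def find_recipient(utterance, usernames, common_words):
    """Return the addressed username, or None if this looks like an initial question."""
    words = utterance.split()
    if not words:
        return None
    first = words[0].rstrip(":,")  # assumption: mentions look like "alice:" or "alice,"
    if first in usernames and first.lower() not in common_words:
        return first
    return None


def to_tuple(time, sender, utterance, usernames, common_words):
    """Build the (time, sender, recipient, utterance) 4-tuple."""
    recipient = find_recipient(utterance, usernames, common_words)
    return (time, sender, recipient, utterance)


usernames = {"alice", "bob", "the"}   # "the" is a real username but a common word
common_words = {"the", "a", "is"}     # stand-in for the Aspell word list
reply = to_tuple("10:01", "bob", "alice: did you md5 test the iso ?", usernames, common_words)
question = to_tuple("10:00", "alice", "the installer hangs at 45 %", usernames, common_words)
```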

Download

Downloaded from the official repository: rkadlec/ubuntu-ranking-dataset-creator on GitHub (a script that creates train, valid and test datasets).

By default, the format of the data set is a list of 3-tuples: (context, response, flag) with flag being a Boolean (0/1) indicating whether the response is the actual next turn after the context. From this format, we simply create a list of valid dialogues by appending the response to the context for each tuple where the flag is 1, i.e.:

- (context, response, 1) --> add context+" "+response to the list of dialogues.

- (context, response, 0) --> skip this tuple. Note that even though the context could be considered as a valid dialogue by itself, we decided to ignore it since the same context will be present in another tuple with its matching response (when flag=1).
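A minimal sketch of this filtering step, assuming the tuples have already been loaded into memory and that the flag is an integer (the actual file parsing is not shown, and the function name is hypothetical):

```python
def build_dialogues(triples):
    """Keep only true (context, response) pairs and join them into full dialogues."""
    return [
        context + " " + response
        for context, response, flag in triples
        if flag == 1
    ]


triples = [
    ("should i download from somewhere else ? __eou__ __eot__",
     "try downloading it manually __eou__ __eot__", 1),
    ("should i download from somewhere else ? __eou__ __eot__",
     "thanks __eou__ __eot__", 0),
]
dialogues = build_dialogues(triples)
```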

Tokenization & Named entities

- NLTK tokenization by adding the `-t` flag in generate.sh (master branch of rkadlec/ubuntu-ranking-dataset-creator on GitHub).

  We found that NLTK tokenization alone wasn't enough for some cases.

- Tweet tokenization by running twokenize.py (master branch of npow/ubottu on GitHub).

  We also found that this script alone wasn't enough for some cases.

- We applied both of these tokenizers and saw that it was too much for some cases (e.g. " I'm " --> " I ' m "), so we decided to correct the following common abbreviations: 'm, 's, 't, 'd, 'll, 've.

- Named entities were not replaced by placeholders. Note that this makes the task harder than in the journal paper, where authors replaced person/location/organization/url/paths with generic placeholders.
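The abbreviation correction mentioned above could look like the sketch below. The exact rule used in the project is not given, so this regular expression is an assumption: it re-attaches the apostrophe to the six listed suffixes only, which leaves forms such as " ' re " untouched, as in the corpus examples.

```python
import re

# Assumption: only the suffixes listed in the text are corrected.
_SPLIT_SUFFIX = re.compile(r" ' (m|s|t|d|ll|ve)\b")


def fix_abbreviations(text):
    """Turn " ' m" back into " 'm" (and likewise for 's, 't, 'd, 'll, 've)."""
    return _SPLIT_SUFFIX.sub(r" '\1", text)


fixed = fix_abbreviations("i ' m not sure if we ' re ready")
```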

Examples

dialogue that probably started earlier:

<<

and because Python gives Mark a woody __eou__ __eot__

i 'm not sure if we ' re meant to talk about that publically yet . __eou__ __eot__

and I thought we were a `` pants off '' kind of company ... : p __eou__ you need new glasses __eou__ __eot__

{...}

>>

dialogue with an out of context discussion:

<<

hey there .. long time __eou__ could n't make it to auug unfortunately , otherwise would have said hello in person __eou__ __eot__

hi ! oh well ... __eou__ __eot__

place I 'm working as is moving offices .. and of course , I 'm the poor sod that is organising the move __eou__ __eot__

{...}

>>

Turn containing URL:

<<

{...}

please add any additional information here : https : // bugzilla.no-name-yet.com/show_bug.cgi ? id=1171 __eou__ __eot__

{...}

>>

Turn containing command and email:

<<

could you please run sudo XFree86 : 42 -ac -logfile /tmp/xfree86-nv-1680x1050 . log -logverbose 999999999 ? __eou__ and email the output to daniel . stone @ canonical.com , cc'ing fabbione @ canonical.com __eou__ __eot__

>>

A dialogue system can only do well on such URLs and commands if it has access to an external knowledge base.

Byte Pair Encoding (BPE)

- Language models such as encoder-decoder architectures output a distribution over the space of possible tokens at each time step of their decoding phase. The raw training data, after tokenization, has a vocabulary size of ~500,000. Decoding over such a large vocabulary would be prohibitively slow, so we applied Byte Pair Encoding to our data.

- Basic idea: given a corpus of text, it will find the most popular character n-grams and split words around those n-grams in order to reduce the vocabulary close to some "preferred" size (in our case preferred size of 5,000 resulted in 6,285 unique tokens).

  The smallest vocabulary size we can get is simply the number of distinct characters (26 if we only count the letters of the alphabet). In that case, we would effectively be training a character-level language model.

- Paper - [1508.07909] Neural Machine Translation of Rare Words with Subword Units

- Code - GitHub - rsennrich/subword-nmt: Subword Neural Machine Translation

- Examples:

  - Original:

    <<dude , stop slagging off our weather . british weather is fun , it keeps you on your toes : ) __eou__ dude , you went home ! __eou__ __eot__ du de , yex __eou__ you ' re going to love it : ) __eou__ __eot__ there is another cd burning patch for rhythmbox ... __eou__>>

  - BPE:

    <<dude , stop sl@@ ag@@ ging off our we@@ a@@ ther . bri@@ ti@@ sh we@@ a@@ ther is fun , it keeps you on your to@@ es : ) __eou__ dude , you wen t home ! __eou__ __eot__ dude , ye@@ x __eou__ you ' re going to love it : ) __eou__ __eot__ there is another cd burning patch for rhythmbox .. . __eou__>>

- To go from BPE to full words, we can remove all `@@ ` in a sentence.
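As a small illustration of this reversal step, the sketch below removes the `@@ ` separators with a regular expression (it also drops a dangling `@@` at the end of a line, which is an extra precaution rather than something stated above). The function name is hypothetical.

```python
import re


def bpe_to_words(line):
    """Merge BPE sub-word units back into full words."""
    return re.sub(r"(@@ )|(@@ ?$)", "", line)


restored = bpe_to_words("dude , stop sl@@ ag@@ ging off our we@@ a@@ ther .")
```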

Stats

All stats below are measured on the tokenized (nltk + tweet + correction) & byte-pair encoded data set:

- Data sizes:

| Statistic | Value |
|---|---|
| Number of training dialogues | 499,873 |
| Number of validation dialogues | 19,560 |
| Number of test dialogues | 18,920 |
| Vocabulary size (after BPE target of 5000) | 6,259 |

- Dialogue stats:

| Statistic | train | val | test |
|---|---|---|---|
| Dialogue length (in number of BPE tokens) | min: 9, max: 1497, avg: 120.632318609, var: 8373.34448223 | min: 12, max: 1040, avg: 127.633588957, var: 9028.61374908 | min: 14, max: 1259, avg: 131.489217759, var: 9889.20516916 |
| Number of turns per dialogue | min: 3, max: 19, avg: 4.95178575358, var: 8.85263454607 | min: 3, max: 19, avg: 4.79248466258, var: 7.7967635606 | min: 3, max: 19, avg: 4.84577167019, var: 8.13752440453 |
| Total number of turns | 2,475,264 | 93,741 | 91,682 |
| Number of unique turns | 2,154,579 | 88,854 | 86,989 |
| Turn length (in number of BPE tokens) | min: 2, max: 813, avg: 24.3613768067, var: 530.535639173 | min: 2, max: 503, avg: 26.6320286748, var: 611.844072467 | min: 2, max: 1064, avg: 27.1348356275, var: 669.331091839 |
| Number of utterances per turn | min: 1, max: 63, avg: 1.5286050296, var: 1.4678089371 | min: 1, max: 24, avg: 1.50656596367, var: 1.2707695528 | min: 1, max: 40, avg: 1.52521759997, var: 1.37907328493 |

- Top 100 most frequent turns in the lowercased training set:

| Turn | Number of occurrences | Number of unique previous turns |
|---|---|---|
| yes __eou__ __eot__ | 11804 | 10786 |
| thanks __eou__ __eot__ | 10028 | 8814 |
| no __eou__ __eot__ | 3875 | 3538 |
| ok __eou__ __eot__ | 3813 | 3479 |
| ? __eou__ __eot__ | 2174 | 1835 |
| thank you __eou__ __eot__ | 1726 | 1591 |
| yeah __eou__ __eot__ | 1707 | 1588 |
| yes . __eou__ __eot__ | 1596 | 1502 |
| thx __eou__ __eot__ | 1594 | 1462 |
| : ) __eou__ __eot__ | 1576 | 1290 |
| thanks ! __eou__ __eot__ | 1560 | 1436 |
| yep __eou__ __eot__ | 1468 | 1361 |
| np __eou__ __eot__ | 1391 | 979 |
| nope __eou__ __eot__ | 1323 | 1228 |
| how ? __eou__ __eot__ | 1242 | 1058 |
| what ? __eou__ __eot__ | 1231 | 1119 |
| thanks . __eou__ __eot__ | 1213 | 1111 |
| why ? __eou__ __eot__ | 1111 | 999 |
| lol __eou__ __eot__ | 1072 | 847 |
| what do you mean ? __eou__ __eot__ | 1036 | 967 |
| thanks : ) __eou__ __eot__ | 909 | 836 |
| ty __eou__ __eot__ | 888 | 818 |
| yup __eou__ __eot__ | 870 | 781 |
| sure __eou__ __eot__ | 854 | 773 |
| ok thanks __eou__ __eot__ | 817 | 768 |
| k __eou__ __eot__ | 708 | 644 |
| how do i do that ? __eou__ __eot__ | 700 | 663 |
| huh ? __eou__ __eot__ | 656 | 606 |
| ok , thanks __eou__ __eot__ | 528 | 496 |
| no problem __eou__ __eot__ | 525 | 433 |
| no . __eou__ __eot__ | 522 | 485 |
| cool __eou__ __eot__ | 492 | 438 |
| hi __eou__ __eot__ | 457 | 231 |
| okay __eou__ __eot__ | 453 | 416 |
| correct __eou__ __eot__ | 445 | 413 |
| what are you trying to do ? __eou__ __eot__ | 436 | 363 |
| why not ? __eou__ __eot__ | 416 | 365 |
| sorry __eou__ __eot__ | 412 | 376 |
| thank you . __eou__ __eot__ | 408 | 392 |
| ah __eou__ __eot__ | 405 | 360 |
| oh __eou__ __eot__ | 388 | 348 |
| thanx __eou__ __eot__ | 383 | 351 |
| yea __eou__ __eot__ | 380 | 351 |
| hmm __eou__ __eot__ | 377 | 309 |
| i see __eou__ __eot__ | 340 | 316 |
| ^ __eou__ __eot__ | 337 | 311 |
| heh __eou__ __eot__ | 331 | 261 |
| i did __eou__ __eot__ | 320 | 295 |
| ? ? __eou__ __eot__ | 320 | 294 |
| : ( __eou__ __eot__ | 309 | 255 |
| cheers __eou__ __eot__ | 301 | 277 |
| right __eou__ __eot__ | 299 | 271 |
| yes ? __eou__ __eot__ | 297 | 250 |
| ok . __eou__ __eot__ | 295 | 272 |
| thank you ! __eou__ __eot__ | 295 | 282 |
| ^^ __eou__ __eot__ | 293 | 273 |
| done __eou__ __eot__ | 285 | 270 |
| ; ) __eou__ __eot__ | 283 | 220 |
| hehe __eou__ __eot__ | 282 | 236 |
| np : ) __eou__ __eot__ | 278 | 211 |
| hello __eou__ __eot__ | 275 | 109 |
| where ? __eou__ __eot__ | 272 | 240 |
| you ' re welcome __eou__ __eot__ | 271 | 209 |
| indeed __eou__ __eot__ | 268 | 241 |
| exactly __eou__ __eot__ | 258 | 239 |
| really ? __eou__ __eot__ | 255 | 226 |
| : d __eou__ __eot__ | 254 | 214 |
| no idea __eou__ __eot__ | 254 | 241 |
| : p __eou__ __eot__ | 253 | 216 |
| what 's the problem ? __eou__ __eot__ | 242 | 226 |
| i know __eou__ __eot__ | 233 | 214 |
| yw __eou__ __eot__ | 231 | 171 |
| any ideas ? __eou__ __eot__ | 225 | 181 |
| thnx __eou__ __eot__ | 211 | 195 |
| i do __eou__ __eot__ | 210 | 191 |
| ok : ) __eou__ __eot__ | 210 | 197 |
| true __eou__ __eot__ | 209 | 190 |
| not really __eou__ __eot__ | 202 | 182 |
| nothing __eou__ __eot__ | 199 | 179 |
| oh ok __eou__ __eot__ | 196 | 177 |
| nope . __eou__ __eot__ | 194 | 178 |
| thank you : ) __eou__ __eot__ | 193 | 179 |
| you ' re welcome . __eou__ __eot__ | 192 | 147 |
| yes it is __eou__ __eot__ | 187 | 172 |
| how so ? __eou__ __eot__ | 183 | 164 |
| # ubuntu+1 __eou__ __eot__ | 182 | 179 |
| : - ) __eou__ __eot__ | 181 | 152 |
| sudo dpkg-reconfigure xserver-xorg __eou__ __eot__ | 181 | 161 |
| yeah . __eou__ __eot__ | 180 | 163 |
| of course __eou__ __eot__ | 179 | 160 |
| ? ? ? __eou__ __eot__ | 179 | 170 |
| nice __eou__ __eot__ | 178 | 159 |
| eh ? __eou__ __eot__ | 178 | 158 |
| ya __eou__ __eot__ | 178 | 168 |
| tnx __eou__ __eot__ | 176 | 167 |
| thanks a lot __eou__ __eot__ | 175 | 167 |
| anyone ? __eou__ __eot__ | 174 | 7 |
| haha __eou__ __eot__ | 174 | 144 |
| good luck __eou__ __eot__ | 166 | 148 |
| it is __eou__ __eot__ | 166 | 153 |

- Top 100 most frequent turns in the lowercased validation set:

| Turn | Number of occurrences | Number of unique previous turns |
|---|---|---|
| yes __eou__ __eot__ | 402 | 389 |
| thanks __eou__ __eot__ | 289 | 278 |
| ok __eou__ __eot__ | 116 | 110 |
| no __eou__ __eot__ | 103 | 102 |
| thanks ! __eou__ __eot__ | 77 | 76 |
| ? __eou__ __eot__ | 74 | 65 |
| thank you __eou__ __eot__ | 66 | 65 |
| yes . __eou__ __eot__ | 61 | 57 |
| ^ __eou__ __eot__ | 59 | 56 |
| what ? __eou__ __eot__ | 47 | 47 |
| nope __eou__ __eot__ | 44 | 44 |
| thx __eou__ __eot__ | 43 | 42 |
| thanks . __eou__ __eot__ | 43 | 43 |
| why ? __eou__ __eot__ | 42 | 40 |
| how ? __eou__ __eot__ | 37 | 37 |
| ok thanks __eou__ __eot__ | 35 | 32 |
| no . __eou__ __eot__ | 34 | 33 |
| yeah __eou__ __eot__ | 33 | 33 |
| yep __eou__ __eot__ | 33 | 33 |
| : ) __eou__ __eot__ | 31 | 27 |
| ty __eou__ __eot__ | 30 | 29 |
| thanks : ) __eou__ __eot__ | 30 | 30 |
| lol __eou__ __eot__ | 30 | 22 |
| yup __eou__ __eot__ | 28 | 28 |
| sure __eou__ __eot__ | 25 | 25 |
| np __eou__ __eot__ | 25 | 21 |
| 12.04 __eou__ __eot__ | 24 | 21 |
| how do i do that ? __eou__ __eot__ | 23 | 23 |
| hi __eou__ __eot__ | 22 | 12 |
| huh ? __eou__ __eot__ | 20 | 19 |
| what are you trying to do ? __eou__ __eot__ | 20 | 14 |
| what do you mean ? __eou__ __eot__ | 19 | 18 |
| you ' re welcome __eou__ __eot__ | 18 | 16 |
| lspci -nn \| grep vga __eou__ __eot__ | 16 | 15 |
| you ' re welcome . __eou__ __eot__ | 16 | 14 |
| ok , thanks __eou__ __eot__ | 16 | 13 |
| okay __eou__ __eot__ | 15 | 14 |
| thank you . __eou__ __eot__ | 15 | 15 |
| hello __eou__ __eot__ | 15 | 7 |
| correct __eou__ __eot__ | 14 | 14 |
| no problem __eou__ __eot__ | 14 | 13 |
| : ( __eou__ __eot__ | 13 | 12 |
| yea __eou__ __eot__ | 13 | 13 |
| thank you ! __eou__ __eot__ | 12 | 12 |
| why not ? __eou__ __eot__ | 12 | 12 |
| anyone ? __eou__ __eot__ | 12 | 0 |
| no problem . __eou__ __eot__ | 11 | 11 |
| hmm __eou__ __eot__ | 11 | 9 |
| what is the output of : lsb_release -sc __eou__ __eot__ | 11 | 10 |
| cool __eou__ __eot__ | 10 | 9 |
| ah __eou__ __eot__ | 10 | 9 |
| sorry __eou__ __eot__ | 10 | 10 |
| k __eou__ __eot__ | 9 | 9 |
| ; ) __eou__ __eot__ | 9 | 9 |
| how do i do that __eou__ __eot__ | 9 | 9 |
| : p __eou__ __eot__ | 9 | 6 |
| both __eou__ __eot__ | 9 | 8 |
| np : ) __eou__ __eot__ | 9 | 9 |
| any ideas ? __eou__ __eot__ | 9 | 8 |
| sweet __eou__ __eot__ | 9 | 9 |
| how __eou__ __eot__ | 9 | 9 |
| thnx __eou__ __eot__ | 8 | 7 |
| sudo fdisk -l __eou__ __eot__ | 8 | 8 |
| precise __eou__ __eot__ | 8 | 5 |
| yes ? __eou__ __eot__ | 8 | 8 |
| indeed __eou__ __eot__ | 8 | 8 |
| good luck __eou__ __eot__ | 8 | 8 |
| no idea __eou__ __eot__ | 7 | 7 |
| thanx __eou__ __eot__ | 7 | 6 |
| what video chip do you use ? __eou__ __eot__ | 7 | 7 |
| ^^ __eou__ __eot__ | 7 | 7 |
| what version of ubuntu ? __eou__ __eot__ | 7 | 6 |
| no worries __eou__ __eot__ | 7 | 7 |
| exactly __eou__ __eot__ | 7 | 7 |
| what is the output of : wget -o alsa-info . sh http : // www.alsa-project.org/alsa-info.sh & & chmod +x . /alsa-info . sh & & . /alsa-info . sh __eou__ __eot__ | 7 | 7 |
| done __eou__ __eot__ | 7 | 7 |
| # ubuntu-offtopic __eou__ __eot__ | 7 | 7 |
| yes i am __eou__ __eot__ | 7 | 7 |
| i know __eou__ __eot__ | 7 | 7 |
| ... __eou__ __eot__ | 7 | 5 |
| i do n't know __eou__ __eot__ | 7 | 7 |
| it is __eou__ __eot__ | 7 | 5 |
| sudo apt-get -f install __eou__ __eot__ | 7 | 7 |
| nice __eou__ __eot__ | 6 | 6 |
| 11.10 __eou__ __eot__ | 6 | 6 |
| yep . __eou__ __eot__ | 6 | 6 |
| thank you very much __eou__ __eot__ | 6 | 6 |
| how so ? __eou__ __eot__ | 6 | 6 |
| : d __eou__ __eot__ | 6 | 6 |
| wrong channel __eou__ __eot__ | 6 | 6 |
| true __eou__ __eot__ | 6 | 5 |
| nothing __eou__ __eot__ | 6 | 6 |
| oh ok __eou__ __eot__ | 6 | 6 |
| how would i do that ? __eou__ __eot__ | 6 | 6 |
| what version of ubuntu are you using ? __eou__ __eot__ | 6 | 5 |
| what __eou__ __eot__ | 6 | 5 |
| cool , thanks __eou__ __eot__ | 6 | 6 |
| ubuntu 12.04 __eou__ __eot__ | 6 | 6 |
| laptop ? __eou__ __eot__ | 6 | 6 |
| ok . __eou__ __eot__ | 6 | 6 |

- Top 100 most frequent turns in the lowercased test set:

Turn

Number of occurrences

Number of unique previous turns

yes __eou__ __eot__

thanks __eou__ __eot__

no __eou__ __eot__

ok __eou__ __eot__

thank you __eou__ __eot__

yes . __eou__ __eot__

? __eou__ __eot__

thanks ! __eou__ __eot__

what do you mean ? __eou__ __eot__

why ? __eou__ __eot__

nope __eou__ __eot__

yeah __eou__ __eot__

thanks : ) __eou__ __eot__

^ __eou__ __eot__

sure __eou__ __eot__

what ? __eou__ __eot__

thanks . __eou__ __eot__

no . __eou__ __eot__

yep __eou__ __eot__

thx __eou__ __eot__

np __eou__ __eot__

ty __eou__ __eot__

how ? __eou__ __eot__

: ) __eou__ __eot__

yup __eou__ __eot__

lol __eou__ __eot__

ok thanks __eou__ __eot__

12.04 __eou__ __eot__

okay __eou__ __eot__

how do i do that ? __eou__ __eot__

correct __eou__ __eot__

k __eou__ __eot__

huh ? __eou__ __eot__

cool __eou__ __eot__

hi __eou__ __eot__

you ' re welcome . __eou__ __eot__

what video chip do you use ? __eou__ __eot__

12.10 __eou__ __eot__

ok , thanks __eou__ __eot__

thank you ! __eou__ __eot__

what exactly are you trying to do ? __eou__ __eot__

nope . __eou__ __eot__

what is the output of : lsb_release -sc __eou__ __eot__

i see __eou__ __eot__

you ' re welcome __eou__ __eot__

what are you trying to do ? __eou__ __eot__

what is that ? __eou__ __eot__

greetings __eou__ __eot__

why not ? __eou__ __eot__

hello __eou__ __eot__

how so ? __eou__ __eot__

precise __eou__ __eot__

? ? __eou__ __eot__

anyone ? __eou__ __eot__

; ) __eou__ __eot__

ah __eou__ __eot__

right __eou__ __eot__

no problem __eou__ __eot__

thank you . __eou__ __eot__

oh __eou__ __eot__

hmm __eou__ __eot__

i did __eou__ __eot__

^^ __eou__ __eot__

ok . __eou__ __eot__

yes ? __eou__ __eot__

# ubuntu+1 __eou__ __eot__

where ? __eou__ __eot__

exactly __eou__ __eot__

what you mean ? __eou__ __eot__

what version of ubuntu are you using ? __eou__ __eot__

yes it is __eou__ __eot__

good luck __eou__ __eot__

what ubuntu version ? __eou__ __eot__

how do you mean ? __eou__ __eot__

what is the output of : wget -o alsa-info . sh http : // www.alsa-project.org/alsa-info.sh & & chmod +x . /alsa-info . sh & & . /alsa-info . sh __eou__ __eot__

how __eou__ __eot__

no idea __eou__ __eot__

are there any bugs reported ? __eou__ __eot__

nice __eou__ __eot__

thank you : ) __eou__ __eot__

and ? __eou__ __eot__

any ideas ? __eou__ __eot__

what is the problem ? __eou__ __eot__

what version of ubuntu ? __eou__ __eot__

hm ? __eou__ __eot__

i know __eou__ __eot__

so ? __eou__ __eot__

sorry __eou__ __eot__

lspci -nn | grep vga __eou__ __eot__

you ' re welcome : ) __eou__ __eot__

yes sir __eou__ __eot__

it does __eou__ __eot__

yes i did __eou__ __eot__

kk __eou__ __eot__

not at all __eou__ __eot__

: ( __eou__ __eot__

ah ok __eou__ __eot__

yep . __eou__ __eot__

what version of ubuntu __eou__ __eot__

389

282

117

110

76

69

60

59

44

41

41

40

38

38

37

36

34

34

34

30

28

28

26

26

24

23

22

21

21

21

19

18

18

18

17

16

16

16

16

15

15

15

15

14

14

14

13

13

13

12

12

12

11

11

11

11

11

11

10

10

10

10

10

9

9

9

9

9

9

9

9

8

8

8

8

8

7

7

7

7

7

7

7

7

7

7

7

7

6

6

6

6

6

6

6

6

6

6

6

6

378

270

113

106

76

62

56

59

43

39

41

40

37

36

37

35

34

32

34

30

27

28

25

23

22

22

22

17

20

20

19

17

18

17

10

16

15

15

14

15

11

15

13

14

13

12

13

11

12

4

12

8

10

0

10

11

11

11

9

10

9

10

10

9

9

9

9

9

9

6

9

8

8

8

8

8

7

7

7

7

7

5

7

6

7

7

6

5

5

6

6

6

6

5

6

6

6

6

6

6

One interesting remark we can make here is that even though there are more than 2,000,000 unique turns, the roughly 11,800 "yes" turns, which represent ~0.5 % of all turns in the training set, greatly influence the model toward producing generic turns.

Models

Previous Work

Dual Encoder

( as described in [1506.08909] The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems )

Can be seen as a discriminator: for a given context, it tries to differentiate between true responses and false ones.

- Encode the context in an RNN to get a context vector c

- Encode the response in an RNN (usually it's the same network parameters as in step 1 to introduce some regularization) to get a response vector r

- Compute sigmoid(c.M.r), with M being a learned matrix, to get the probability that this context-response pair is a true one.

Can also be seen as a retrieval model: consider a collection of possible responses (i.e. every turn in the training set).

For any given context we encode it (c), and encode all possible responses (r_1, r_2, r_3, ..., r_k).

We then return the response r_i that maximises sigmoid(c.M.r_i).
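The scoring and retrieval steps can be sketched in plain Python as below. The encoders themselves are omitted: `c` and the candidate vectors are assumed to be already-computed encodings, and the function names and toy values are hypothetical.

```python
import math


def bilinear_score(c, M, r):
    """sigmoid(c^T M r) for vectors c (length n), r (length m) and an n x m matrix M."""
    Mr = [sum(m_ij * r_j for m_ij, r_j in zip(row, r)) for row in M]
    logit = sum(c_i * v for c_i, v in zip(c, Mr))
    return 1.0 / (1.0 + math.exp(-logit))


def retrieve(c, M, candidates):
    """Return (index of the best-scoring candidate, list of all scores)."""
    scores = [bilinear_score(c, M, r) for r in candidates]
    best = max(range(len(candidates)), key=scores.__getitem__)
    return best, scores


c = [1.0, 0.0]                       # toy context encoding
M = [[1.0, 0.0], [0.0, 1.0]]         # toy matrix (identity)
candidates = [[-1.0, 0.0], [2.0, 0.0], [0.0, 5.0]]  # toy response encodings
best, scores = retrieve(c, M, candidates)
```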

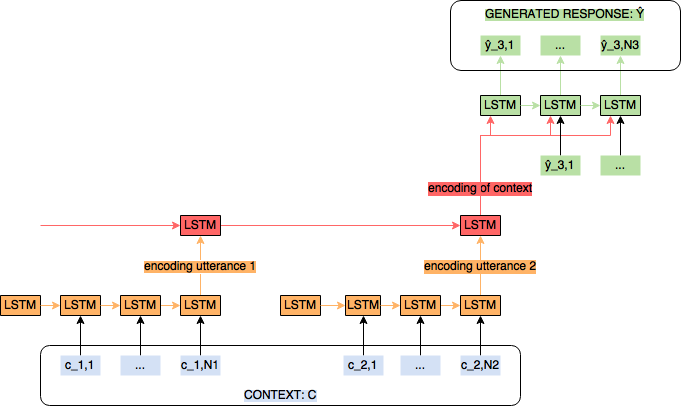

HRED (Hierarchical Recurrent Encoder Decoder)

( as described in [1507.04808] Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models )

This is a generative model which tries to learn the training data distribution in order to generate NEW responses:

- A context (i.e. a dialogue history: a collection of turns between 2 users) is encoded using a hierarchical RNN:

- The first layer is called "turn encoder". It encodes each turn of the context into a vector.

- The second layer is called "dialogue encoder" or "context encoder". Given all the turn encodings from the previous layer, it will encode them into a context vector.

- The context vector is passed to a decoder RNN ("turn decoder") that outputs a token distribution at each time step. We perform beam sampling from this distribution to create a new response. In our task, this new response is supposed to be the next turn a user could say after seeing the previous dialogue turns.

Note: as mentioned in the original paper, this model (which is trained using a maximum likelihood estimate objective) is prone to generating generic responses like "thanks", "i don't know", ...

New Ideas

Dual encoder + HRED

The original project idea I had was to help the decoder of the HRED model to generate less generic responses by giving it some retrieved responses. The process would be in two steps:

- Given a context, we retrieve the k most probable responses from the Dual Encoder system: (r_r1, r_r2, ..., r_rk).

- We encode the context with the hierarchical RNN to get vector c, concatenate the k retrieved responses: (c, r_r1, r_r2, ..., r_rk), and give this vector to the decoder instead of only giving it c.

However, we noticed after training a baseline HRED model that the decoder is essentially a language model that doesn't look at the context encoding very much. We think adding more information here will do nothing if the decoder is not paying attention to it.

The code to support this has nevertheless been written, but it has not been tested, so it is probably buggy at this stage.

Attention

We thus decided to improve the HRED model by adding an attention mechanism between the turn encoder and the context encoder, and another attention between the context encoder and the turn decoder.

Having an attention mechanism between two layers (say L1 below and L2 on top) will allow each hidden state in L2 to compute a weighted sum of all hidden states in L1, instead of only looking at the last hidden state in L1. We thus get the following architecture:

Note that the attention weights a depend on the hidden states (h_i) of the lower layer (L1) and on the hidden state we are currently looking at (h_t) in the upper layer (L2). We believe and hope that the network will learn to keep only what it finds interesting at time t, and filter useless information by having low value for a_t,i if h_i encodes something the network doesn't care about.

We define the new representation of the output from L1 to L2 to be: with

and with

At each time step t in L2, we thus compute c_t instead of simply looking at the last h_i from L1.

The attention mechanism used above is very simple in terms of architecture: we simply did a matrix multiplication between hidden states to compute the attention weights. More complex attention mechanisms could use feedforward neural networks to compute e_t,i.
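The quantities named above (c_t, a_t,i and e_t,i) can be written in the usual attention form below. This is a sketch under assumptions rather than the exact formulas used in the project: the weights are assumed to be normalised with a softmax, and the score is assumed to be a bilinear product with a learned matrix W (a hypothetical symbol) that maps between the hidden sizes of L1 and L2.

```latex
c_t = \sum_{i} a_{t,i}\, h_i
\qquad\text{with}\qquad
a_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j} \exp(e_{t,j})}
\qquad\text{and with}\qquad
e_{t,i} = h_t^{\top} W\, h_i
```

Here h_i ranges over the hidden states of the lower layer L1 and h_t is the current hidden state of the upper layer L2, as in the description above.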

Finally, the formulas above were applied to both pairs of layers: (L1 = turn encoder & L2 = context encoder) and (L1 = context encoder & L2 = turn decoder).

Trigrams

In order to further improve our model, we modified the beam sampling code that outputs tokens at each time step of the decoder. We noticed that generated responses were often repeating the same thing:

I 'm not sure what you mean , I 'm not sure what you mean , I 'm not sure what you mean . __eou__ __eot__

Taking inspiration from [1705.04304] A Deep Reinforced Model for Abstractive Summarization, we prevent the repetition of past trigrams in the same response. To do so we check at each generation step the past trigrams we generated and set the probability (coming from the decoder) of all tokens that will re-generate a past trigram to 0 to avoid sampling those tokens at this time step.
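A minimal sketch of this masking step, operating on one hypothesis at a time. The function name is hypothetical, and renormalising the remaining probabilities after masking is an assumption (the description above only states that the probabilities are set to 0).

```python
def block_repeated_trigrams(generated, probs):
    """Zero the probability of every token that would re-create a past trigram.

    generated: token ids produced so far in this response.
    probs: next-token probabilities, indexed by token id.
    """
    prefix = tuple(generated[-2:])
    # A token is banned if (prefix + token) already occurred as a trigram.
    banned = {
        generated[i + 2]
        for i in range(len(generated) - 2)
        if tuple(generated[i:i + 2]) == prefix
    }
    masked = [0.0 if tok in banned else p for tok, p in enumerate(probs)]
    total = sum(masked)
    # Assumption: renormalise so the masked vector is still a distribution.
    return [p / total for p in masked] if total > 0 else masked


# Tokens 1 2 3 1 2 were generated: emitting 3 again would repeat trigram (1, 2, 3).
out = block_repeated_trigrams([1, 2, 3, 1, 2], [0.1, 0.1, 0.1, 0.6, 0.1])
```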

Downsampling of generic turns

Lastly, generic responses are a well-known problem in dialogue generation models. Multiple ideas have been proposed to deal with this issue.

One idea, coming from [1510.03055] A Diversity-Promoting Objective Function for Neural Conversation Models is to first generate responses with a large beam size (~200) according to their likelihood given a context, and then rerank them according to a mutual information metric that aims at maximizing both the likelihood of the context given the response ( Pr(context | response) ) and the likelihood of the response given the context ( Pr(response | context) ).

The problem we found is that even with a large beam size, our responses are still very generic, and their quality starts degrading with a beam size of 200. Thus, re-ranking those responses would not do any good since all of them are already quite bad.

To solve this issue, we decided to remove generic responses directly from the training set by doing the following:

- For each turn in each dialogue, we count the number of times it occurs and the number of unique previous turns it has. For instance, the turn "yes __eou__ __eot__" occurred 11,804 times in the training set and came after 10,786 unique previous turns. This means that this is a very generic turn. On the other hand, turns that occur only a few times or that have only a small number of unique previous turns are considered to be specific.

- For each dialogue in the training set only:

  - For each turn in the dialogue, we compute a probability of flagging this turn as generic:

    Pr(generic turn) = 1 - (1 / number_of_unique_previous_turns )

    We then flag that turn as generic with the above probability.

  - Finally, we uniformly sample one of the turns flagged as generic and truncate the dialogue just before this turn.

It is important to note that when a dialogue contains several generic turns which are flagged for removal, we don't truncate the dialogue after the first flagged generic turn. We instead randomly select one of the flagged generic turns and truncate the dialogue before it. The resulting dialogue might still contain earlier turns which are generic. Always cutting our dialogue as soon as we see a generic turn would force us to ignore a lot of information in the dialogue and may cut a conversation too early to be useful.
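The whole procedure can be sketched as follows, with each dialogue represented as a list of turn strings. The function names are hypothetical, and two details are assumptions because the text does not specify them: turns with no recorded previous turn are never flagged (this avoids a division by zero), and a dialogue truncated before its first turn simply comes back empty and would have to be filtered out by the caller.

```python
import random
from collections import Counter, defaultdict


def turn_statistics(dialogues):
    """Count occurrences and unique previous turns for every turn."""
    occurrences = Counter()
    previous = defaultdict(set)
    for turns in dialogues:
        for i, turn in enumerate(turns):
            occurrences[turn] += 1
            if i > 0:
                previous[turn].add(turns[i - 1])
    return occurrences, {turn: len(prev) for turn, prev in previous.items()}


def generic_probability(n_unique_previous):
    """Pr(generic turn) = 1 - 1 / number_of_unique_previous_turns."""
    if n_unique_previous < 1:  # assumption: never flag turns without a previous turn
        return 0.0
    return 1.0 - 1.0 / n_unique_previous


def truncate_dialogue(turns, unique_previous, rng):
    """Flag turns as generic at random, then cut just before one flagged turn."""
    flagged = [
        i for i, turn in enumerate(turns)
        if rng.random() < generic_probability(unique_previous.get(turn, 0))
    ]
    if not flagged:
        return list(turns)
    cut = rng.choice(flagged)  # uniform choice among the flagged turns
    return turns[:cut]


toy = [["a", "yes"], ["b", "yes"], ["c", "thanks"]]
occurrences, unique_previous = turn_statistics(toy)
```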

The above solution has the advantage of reducing the number of generic turns in the training set, while making sure we don't lose too much information and still have long enough dialogues to train our models.

Experiments

Disclaimer: the number of fine tuning experiments was limited due to GPU availability and long training time.

HRED baseline + avoid repeating trigrams

The first set of experiments we conducted used the baseline HRED model (without attention) and the "sample trick" of avoiding repeating trigrams in a generated turn.

- NOTE: the training set was reduced to only the first 200,000 dialogues instead of considering the full data set (of size 499,873). This was done to reduce the GPU load and speed up the learning. We didn't notice any difference in terms of the quality of the generated turns; the model was still able to learn how to properly write English text.

- We didn't consider dialogues with fewer than 3 BPE tokens (including the '__eou__' and '__eot__' tags) or more than 350 BPE tokens.

- The learning optimizer used was Adam ([1412.6980] Adam: A Method for Stochastic Optimization ) with default parameters.

- The RNN networks ('turn encoder', 'context encoder', and 'turn decoder') were unidirectional networks.

- The two encoders use GRU hidden units and the turn decoder uses LSTM hidden units.

- Batch size was reduced to 40 in order to avoid running out of GPU memory.

- BPE embedding size was set to 200. Embeddings were initialized with random floats between -0.25 and +0.25 and learned during training. Note that the embeddings are shared between the encoders and the decoder. It may be worth trying to pre-train BPE embeddings on the corpus after it has been converted to BPE tokens.

- We generated responses using beam search with beam sizes of 1 and 5.

- We did 15 experiments with the above parameters fixed, and the following combination of parameter values:

- 5 learning rates: 0.0002 , 0.00265, 0.0051 , 0.00755, 0.01

- 3 set of hidden sizes: "exp1" (200, 300, 200) , "exp2" (300, 400, 300) , "exp3" (400, 500, 400) for (turn encoder, context encoder, turn decoder) respectively.
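The 15 configurations are the cross product of the 5 learning rates and the 3 sets of hidden sizes. A minimal sketch of how such a grid can be enumerated is given below; the dictionary keys are illustrative names, not the option names of our training code.

```python
from itertools import product

learning_rates = [0.0002, 0.00265, 0.0051, 0.00755, 0.01]
hidden_sizes = {  # (turn encoder, context encoder, turn decoder)
    "exp1": (200, 300, 200),
    "exp2": (300, 400, 300),
    "exp3": (400, 500, 400),
}
# Parameters kept fixed across the grid (key names are hypothetical).
fixed = {"batch_size": 40, "embedding_size": 200, "optimizer": "adam"}

configs = [
    dict(fixed, name=name, learning_rate=lr,
         turn_encoder=sizes[0], context_encoder=sizes[1], turn_decoder=sizes[2])
    for lr, (name, sizes) in product(learning_rates, hidden_sizes.items())
]
```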

HRED with attention + avoid repeating trigrams

In the second set of experiments, we used HRED with attention (as described previously), and the "sample trick" of avoiding repeating trigrams in a generated turn.

- We used the same parameters as in the previous set of experiments, but ran only 9 experiments with the 3 lowest learning rates, as they yielded better results (in terms of learning cost). The parameters explored were:

- 3 learning rates: 0.0002, 0.00265, 0.0051

- 3 sets of hidden sizes: "att1" (200, 300, 200), "att2" (300, 400, 300), "att3" (400, 500, 400) for (turn encoder, context encoder, turn decoder) respectively.

HRED with attention + avoid repeating trigrams + lowercase data + downsampling generic responses

Finally, the last set of experiments used HRED with attention, the "sample trick" of avoiding repeating trigrams in a generated turn, lowercased data, and training data from which generic turns had been removed as described previously.

- In order to compare with the previous experiments, we ran 3 experiments with the same setup as before, using only the smallest learning rate, as it was always better in terms of learning cost:

- 1 learning rate: 0.0002

- 3 sets of hidden sizes: "att1" (200, 300, 200) , "att2" (300, 400, 300) , "att3" (400, 500, 400) for (turn encoder, context encoder, turn decoder) respectively.

- We then explored different setups. Again with a learning rate of 0.0002, but this time having a bi-directional GRU for the turn encoder, we explored the following encoding sizes:

- "att11" (300, 500, 600, 500) , "att12" (300, 600, 700, 600) , "att13" (300, 700, 800, 700) , "att14" (400, 800, 800, 1000) , "att15" (400, 1000, 100, 1500) , "att16" (400, 1000, 1000, 2000) for (BPE token embedding, turn encoder, context encoder, turn decoder) respectively.

Results

Metrics

Here we describe the metrics we used to evaluate our models.

Log likelihood

This is the score our network is trained to maximize. At each step in the decoder network, we compute a softmax over the vocabulary size and want the probability of the actual next turn BPE tokens to be maximized. The cost of the network is thus the negative log likelihood.

Perplexity

In our experiments, the perplexity was calculated as the exponential of the cost, i.e. of the negative log likelihood described above:

$$\text{perplexity} = \exp(\text{cost})$$

The lower the perplexity, the better our model is as it measures how well the model can predict the correct next turn.
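The relation between the cost and the perplexity can be sketched as follows. The sketch assumes that the cost is the negative log likelihood averaged over the reference BPE tokens; the function names and the toy probabilities are illustrative only.

```python
import math


def negative_log_likelihood(token_probs):
    """Average negative log likelihood of the reference tokens.

    token_probs -- probability the decoder assigned to each reference token
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def perplexity(cost):
    """Perplexity as the exponential of the (average) cost."""
    return math.exp(cost)


probs = [0.5, 0.25, 0.125]  # toy decoder probabilities for three tokens
cost = negative_log_likelihood(probs)
ppl = perplexity(cost)
```

With this definition, a test cost of 3.9524 corresponds to a perplexity of about 52.06, which agrees with the values reported in the results tables.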

Recall

One type of metric we used is a retrieval-based metric. We consider the task of selecting the correct response from a candidate list and evaluate our model using the metric of Recall@k (see [1605.05414] On the Evaluation of Dialogue Systems with Next Utterance Classification, and [1506.08909] The Ubuntu Dialogue Corpus: A Large Dataset for Research in Unstructured Multi-Turn Dialogue Systems, where it is used).

The agent is asked to select the k most likely responses from a candidate list, and it is considered to be correct if the true response is among those k candidates. This metric has useful properties:

- The performance (i.e. loss or error) is easy to compute automatically.

- It is simple to adjust the difficulty of the task (lower values of k increase the difficulty of the task).

- The task is interpretable and amenable to comparison with human performance.

We created a list of 10 possible responses for each context in each of the train, validation, and test partitions. From a dialogue consisting of multiple turns, we consider the last turn as the 'true response', all the previous turns as the 'context' and we randomly sample 9 other turns from the data set to create our list of candidate responses.

In order to select the k most likely responses (out of 10), we feed the context to the network and rank the candidate responses by their probability according to the decoder output. If the true response is in the top k, the model is deemed to be correct.

We measured the model accuracy on recall@1, recall@2, and recall@5. Note that a random model would achieve an accuracy of 0.1, 0.2, and 0.5 respectively.
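The ranking step can be sketched as below. The candidate scores are made-up log probabilities standing in for the decoder output, and the function names are illustrative.

```python
def recall_at_k(scores, true_index, k):
    """Return 1 if the true response is among the k highest-scoring candidates."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return int(true_index in ranked[:k])


def mean_recall_at_k(examples, k):
    """Average recall@k over (scores, true_index) pairs."""
    return sum(recall_at_k(s, t, k) for s, t in examples) / len(examples)


# Hypothetical scores for 10 candidates per context; index 0 is the true response.
examples = [
    ([-12.0, -15.5, -11.2, -20.1, -18.3, -14.9, -16.0, -19.7, -13.4, -17.8], 0),
    ([-8.0, -10.4, -8.7, -11.9, -12.5, -10.0, -13.2, -9.8, -11.1, -12.0], 0),
]
r1 = mean_recall_at_k(examples, 1)
r2 = mean_recall_at_k(examples, 2)
r5 = mean_recall_at_k(examples, 5)
```

In the first example the true response is ranked second, so it counts as correct for recall@2 and recall@5 but not for recall@1.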

This evaluation metric presents the following drawbacks:

- We only evaluate our model on the last turn of a dialogue.

- Overall, this is an unrealistic metric as it doesn't mimic a production system where the list of possible responses would be much more than 10.

- As mentioned above, this is a metric designed for retrieval-based systems. It is not well suited for the evaluation of generative models which can generate valid responses different from the gold truth response extracted from the data set.

Embedding based metrics

Furthermore, we consider embedding based metrics where we compute the similarity between a generated response and the gold truth response. We do so by encoding the two responses according to their word embeddings and measuring the cosine similarity between the two. We noticed that BPE token embeddings don't make sense in evaluation since they only capture partial information about a word while full word embeddings are a more comprehensive representation of a word. Indeed, the conditional probability distribution of two BPE tokens is not the same as the conditional probability of two words. For example, if we see "exact@@ ", the next token will very likely be "ly" to form the adverb "exactly". On the other hand, if we work with full words and see "exact", the next word could be a lot of things.

We thus consider full words for the generated and the gold truth responses (we can convert BPE responses by replacing all occurrences of "@@ " by nothing). We decided to use the pre-trained Word2Vec embeddings from Google, as well as the pre-trained word embeddings from the Dual Encoder model described previously. Note that no fine-tuning has been done in the dual encoder task, so the embeddings might not be optimal and could be further improved. However, we still wanted to use those embeddings as well since they were trained on the Ubuntu corpus, unlike word2vec embeddings.
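The conversion from BPE tokens back to full words amounts to a single string replacement. A minimal sketch, with a made-up example sentence:

```python
def bpe_to_words(bpe_text):
    """Merge BPE sub-word tokens back into full words by dropping '@@ '."""
    return bpe_text.replace("@@ ", "")


restored = bpe_to_words("that is exact@@ ly what I me@@ an")
```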

As described in [1603.08023] How NOT To Evaluate Your Dialogue System: … , we consider three different embedding-based metrics:

Average embedding (AE)

The embedding average (e_r) is defined as the mean of the word embeddings (e_w) of each token w in a sentence r:

$$e_r = \frac{1}{|r|} \sum_{w \in r} e_w$$

To compare a ground truth response r and a generated response r', we compute the cosine similarity between their respective sentence-level embeddings:

$$\mathrm{AE}(r, r') = \cos(e_r, e_{r'})$$
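A minimal pure-Python sketch of the average embedding score is given below. The two-dimensional toy embeddings are invented for illustration, and skipping out-of-vocabulary tokens is an assumption of this sketch rather than a documented choice.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def average_embedding(tokens, emb):
    """Mean of the word embeddings of the tokens found in `emb`."""
    vectors = [emb[t] for t in tokens if t in emb]  # assumption: skip OOV words
    if not vectors:
        raise ValueError("no token has an embedding")
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(len(vectors[0]))]


def ae_score(ref_tokens, gen_tokens, emb):
    """Cosine similarity between the two sentence-level average embeddings."""
    return cosine(average_embedding(ref_tokens, emb), average_embedding(gen_tokens, emb))


toy_emb = {"thanks": [1.0, 0.0], "ok": [0.0, 1.0], "great": [1.0, 1.0]}
same = ae_score(["thanks", "great"], ["great", "thanks"], toy_emb)
different = ae_score(["thanks"], ["ok"], toy_emb)
```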

Greedy matching (GM)

Greedy matching is the one embedding-based metric that does not compute sentence-level embeddings. Instead, given two sequences r and r', each token w ∈ r is greedily matched with a token w' ∈ r' based on the cosine similarity of their word embeddings (e_w), and the total score is then averaged across all words:

$$G(r, r') = \frac{1}{|r|} \sum_{w \in r} \max_{w' \in r'} \cos(e_w, e_{w'})$$

This formula is asymmetric, thus we must average the greedy matching scores G in each direction:

$$\mathrm{GM}(r, r') = \frac{G(r, r') + G(r', r)}{2}$$

The greedy approach favors responses with keywords that are semantically similar to those in the ground truth response.
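The two-directional greedy matching can be sketched as follows, again with invented toy embeddings and illustrative function names. The sketch assumes every token has an embedding.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_direction(src, tgt, emb):
    """Match each token of `src` to its most similar token in `tgt`, then average."""
    return sum(max(cosine(emb[w], emb[w2]) for w2 in tgt) for w in src) / len(src)


def greedy_matching(ref, gen, emb):
    """Average of the greedy scores computed in both directions."""
    return (greedy_direction(ref, gen, emb) + greedy_direction(gen, ref, emb)) / 2


toy_emb = {"thanks": [1.0, 0.0], "ok": [0.0, 1.0]}
ref, gen = ["thanks", "ok"], ["thanks"]
forward = greedy_direction(ref, gen, toy_emb)   # "ok" has no good match in gen
backward = greedy_direction(gen, ref, toy_emb)  # "thanks" matches itself in ref
score = greedy_matching(ref, gen, toy_emb)
```

The two directions give different values here (0.5 and 1.0), which is why the final score averages them.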

Vector extrema (VE)

For each dimension d of the word embeddings, we take the most extreme value among all word embeddings in the sentence, and use that value in the sentence-level embedding:

$$e_{rd} = \begin{cases} \max_{w \in r} e_{wd} & \text{if } \max_{w \in r} e_{wd} > \left| \min_{w' \in r} e_{w'd} \right| \\ \min_{w \in r} e_{wd} & \text{otherwise} \end{cases}$$

where d indexes the dimensions of a vector; e_wd is the dth dimension of e_w (w’s embedding). The similarity between response vectors is computed using cosine similarity, just like in the average embedding case.

Intuitively, this approach prioritizes informative words over common ones; words that appear in similar contexts will be close together in the vector space. Thus, common words are pulled towards the origin because they occur in various contexts, while words carrying important semantic information will lie further away. By taking the extreme along each dimension, we are thus more likely to ignore common words.
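A minimal sketch of the vector extrema sentence embedding is given below. The toy embeddings are invented, and skipping out-of-vocabulary tokens is an assumption of this sketch.

```python
def vector_extrema(tokens, emb):
    """Per dimension, keep the value with the largest magnitude across tokens."""
    vectors = [emb[t] for t in tokens if t in emb]  # assumption: skip OOV words
    if not vectors:
        raise ValueError("no token has an embedding")
    extrema = []
    for d in range(len(vectors[0])):
        column = [v[d] for v in vectors]
        hi, lo = max(column), min(column)
        # Keep the maximum if it dominates the minimum in magnitude, else the minimum.
        extrema.append(hi if hi > abs(lo) else lo)
    return extrema


toy_emb = {"a": [0.1, -0.9], "b": [0.8, 0.2], "c": [-0.3, 0.4]}
sentence_vector = vector_extrema(["a", "b", "c"], toy_emb)
```

In the first dimension the maximum (0.8) wins, while in the second dimension the minimum (-0.9) has the larger magnitude and is kept. Two such sentence vectors are then compared with cosine similarity, as for the average embedding.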

We compute the average score of these three metrics on a collection of gold truth & generated responses where the context to each response is the cumulative turns in a dialogue. For example if our dialogue is (turn_1, turn_2, turn_3, turn_4), we feed to our network the following contexts: (turn_1), (turn_1, turn_2), (turn_1, turn_2, turn_3) to generate resp_2, resp_3, resp_4 and compute their score based on the ground truth responses: turn_2, turn_3, and turn_4.
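The cumulative contexts can be enumerated as in the following sketch (the function name is illustrative):

```python
def cumulative_contexts(turns):
    """Return (context, ground truth response) pairs for every turn after the first."""
    return [(turns[:i], turns[i]) for i in range(1, len(turns))]


dialogue = ["turn_1", "turn_2", "turn_3", "turn_4"]
pairs = cumulative_contexts(dialogue)
```

For the four-turn dialogue above this yields three pairs, with contexts of one, two, and three turns and ground truth responses turn_2, turn_3, and turn_4.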

Compared to word-overlap metrics such as BLEU, ROUGE and METEOR scores, we believe that metrics based on distributed sentence representations hold the most promise for the future. This is because word-overlap metrics will simply require too many ground-truth responses to find a significant match for a reasonable response due to the high diversity of dialogue responses.

Note that in each of the embedding metrics, the structure of the sentence is completely lost. For example, if the ground truth response was "I like red apples but not green apples" but the generated response was "I like green apples but not red apples", the embedding score would be 1 even though the two sentences mean the exact opposite.
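This loss of word order can be checked directly for the average embedding: both sentences contain exactly the same words, so their sentence-level embeddings coincide. The sketch below uses random toy embeddings (seeded for reproducibility), which are an assumption made only for illustration.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def average_embedding(tokens, emb):
    """Mean of the word embeddings of the tokens."""
    dim = len(emb[tokens[0]])
    return [sum(emb[t][d] for t in tokens) / len(tokens) for d in range(dim)]


ref = "I like red apples but not green apples".split()
gen = "I like green apples but not red apples".split()

rng = random.Random(0)
# Random 5-dimensional toy embeddings, one per distinct word.
emb = {w: [rng.uniform(-1, 1) for _ in range(5)] for w in sorted(set(ref))}

score = cosine(average_embedding(ref, emb), average_embedding(gen, emb))
```

The score equals 1 up to floating-point rounding, whatever embeddings are used, because the average ignores the order of the words.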

Since those metrics only consist of basic averages of vectors obtained through distributional semantics, they are insufficiently complex for modeling sentence-level compositionality in a dialogue. Instead, these metrics can be interpreted as calculating the topicality of a proposed response (i.e. how on-topic the proposed response is, compared to the ground-truth).

Human Evaluation

Since none of the above metrics are perfect, we also considered a human evaluation round. We asked several Nuance researchers in the field of NLU to rate responses. We sampled 200 random contexts, and 5 responses for each of them. The responses came from the following sources:

- Randomly sampled human response.

- Gold truth (human) response.

- Response generated by one model in the 1st set of experiments: hred baseline

- Response generated by the same model in the 2nd set of experiments: hred with attention

- Response generated by the same model in the 3rd set of experiments: hred with attention + lowercased data + removed generic turns

We asked researchers to give a score between 1 (poor response) and 5 (very good response) to each response for each context, not knowing where the response was coming from. Researchers were asked to score each response independently of the other responses (i.e. they were asked to score the response based only on how appropriate it is given the context).

The 200 samples were split into four groups of 50 samples each. Each group was analyzed by 3 different researchers.

Results

Here we present results for the three sets of experiments previously described with the aforementioned metrics.

Recall and Embedding metrics

- HRED baseline results on test set, avoid repeating past trigrams, with the smallest learning rate and the 3 different encoding sizes:

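Cost, perplexity, and recall are computed on the test set (TEST); the embedding metrics are computed on the test set turn contexts (TEST - turn contexts) and reported as BEAM1 / BEAM5.

Word2Vec embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg | Greedy Matching | Vector Extrema |
|---|---|---|---|---|---|---|---|---|
| exp01 | 3.9524 | 52.0578 | | | | | | |
| exp02 | 3.9399 | 51.4129 | 21.982 | 35.206 | 66.195 | 0.575 / 0.558 | 0.438 / 0.430 | 0.317 / 0.318 |
| exp03 | 3.9455 | 51.7045 | 22.511 | 35.719 | 66.401 | 0.582 / 0.561 | 0.444 / 0.433 | 0.322 / 0.323 |

Dual Encoder embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg | Greedy Matching | Vector Extrema |
|---|---|---|---|---|---|---|---|---|
| exp01 | 3.9524 | 52.0578 | | | | | | |
| exp02 | 3.9399 | 51.4129 | 21.982 | 35.206 | 66.195 | 0.624 / 0.591 | 0.578 / 0.582 | 0.372 / 0.364 |
| exp03 | 3.9455 | 51.7045 | 22.511 | 35.719 | 66.401 | 0.628 / 0.593 | 0.579 / 0.581 | 0.374 / 0.363 |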

We can see already that the best model according to the perplexity is not the best model according to the recall and embedding metrics.

- HRED with attention results on test set, avoid repeating past trigrams, with the smaller learning rates and the 3 different encoding sizes:

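Word2Vec embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg (BEAM1 / BEAM5) | Greedy Matching (BEAM1 / BEAM5) | Vector Extrema (BEAM1 / BEAM5) |
|---|---|---|---|---|---|---|---|---|
| att01 | 2.6663 | 14.3872 | | | | | | |
| att02 | 2.6587 | 14.2781 | 21.152 | 35.291 | 66.263 | 0.528 / 0.517 | 0.405 / 0.396 | 0.296 / 0.297 |
| att03 | 2.5746 | 13.1267 | 19.070 | 32.104 | 63.277 | 0.515 / 0.500 | 0.395 / 0.387 | 0.293 / 0.294 |

Dual Encoder embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg (BEAM1 / BEAM5) | Greedy Matching (BEAM1 / BEAM5) | Vector Extrema (BEAM1 / BEAM5) |
|---|---|---|---|---|---|---|---|---|
| att01 | 2.6663 | 14.3872 | | | | | | |
| att02 | 2.6587 | 14.2781 | 21.152 | 35.291 | 66.263 | 0.569 / 0.541 | 0.556 / 0.563 | 0.340 / 0.331 |
| att03 | 2.5746 | 13.1267 | 19.070 | 32.104 | 63.277 | 0.548 / 0.517 | 0.565 / 0.567 | 0.328 / 0.317 |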

We can see that having the attention mechanism greatly reduces the perplexity, but doesn't help the model to perform better in terms of recall and embedding metrics.

- HRED with attention, avoid repeating past trigrams, with the smaller learning rates, different encoding sizes, lower-cased data, and generic turns removed:

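Cost, perplexity, and recall are computed on the test set (TEST); the embedding metrics are computed on the test set turn contexts (TEST - turn contexts) and reported as BEAM1 / BEAM5.

Evaluation on test set with Word2Vec embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg | Greedy Matching | Vector Extrema |
|---|---|---|---|---|---|---|---|---|
| att03 | 2.5682 | 13.0423 | 18.821 | 32.077 | 63.552 | 0.551 / 0.536 | 0.414 / 0.404 | 0.307 / 0.306 |
| att15 | 2.5345 | 12.6101 | 19.725 | 33.531 | 64.889 | 0.370 / 0.358 | 0.312 / 0.301 | 0.246 / 0.239 |
| att16 | 2.5732 | 13.1077 | 16.686 | 29.725 | 60.909 | 0.488 / 0.4667 | 0.374 / 0.360 | 0.278 / 0.273 |

Evaluation on test set with Dual Encoder embeddings:

| Model | Test cost | Test perplexity | Recall 1@10 (%) | Recall 2@10 (%) | Recall 5@10 (%) | Embedding Avg | Greedy Matching | Vector Extrema |
|---|---|---|---|---|---|---|---|---|
| att03 | 2.5682 | 13.0423 | 18.821 | 32.077 | 63.552 | 0.596 / 0.573 | 0.563 / 0.568 | 0.353 / 0.347 |
| att15 | 2.5345 | 12.6101 | 19.725 | 33.531 | 64.889 | 0.395 / 0.376 | 0.567 / 0.563 | 0.265 / 0.254 |
| att16 | 2.5732 | 13.1077 | 16.686 | 29.725 | 60.909 | 0.523 / 0.498 | 0.563 / 0.562 | 0.3175 / 0.304 |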

We can see that removing generic turns only slightly reduces the perplexity. We note also that larger models can produce better results (att15, att16).

Surprisingly, the best perplexity and recall are achieved when the context encoder has only 100 hidden units ("att15"). One possible explanation is that the context encoding is actually not good enough for the decoder, so having a small signal reduces the "noise" given to the decoder.

Samples

- Here we take a few contexts and generate the responses with an N-best of 5 with a model from all 3 sets of experiments:

Sample 1

Context:

anyone knows why my stock oneiric exports env var ' USERNAME ' ? I mean what is that used for ? I know of $ USER but not $ USERNAME . My precise install does n't export USERNAME __eou__ __eot__

Gold Truth response:

looks like it used to be exported by lightdm , but the line had the comment `` // FIXME : Is this required ? '' so I guess it is n't surprising it is gone __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) I do n't know , sorry . __eou__ __eot__

2) I do n't think you need to do that . __eou__ __eot__

3) I do n't know , but I 've never used it . __eou__ __eot__

4) I do n't think you need to do that __eou__ __eot__

5) I do n't know , sorry __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) you need to edit /etc/modprob e.d __eou__ __eot__

2) you need to edit /etc/modprob e.d and /etc/mtab __eou__ __eot__

3) you need to add a line to /etc/modules __eou__ __eot__

4) you need to edit /etc/modules . conf __eou__ __eot__

5) you need to edit /etc/modules __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) you can change the owner of the file __eou__ __eot__

2) i think you can do that with ls -l __eou__ __eot__

3) you can change the owner of the file , but it does n't do it __eou__ __eot__

4) you can change the owner of the file , but it does n't do anything __eou__ __eot__

5) you can change the owner of the file , but it does n't matter __eou__ __eot__

Sample 2

Context:

anyone knows why my stock oneiric exports env var ' USERNAME ' ? I mean what is that used for ? I know of $ USER but not $ USERNAME . My precise install does n't export USERNAME __eou__ __eot__

looks like it used to be exported by lightdm , but the line had the comment `` // FIXME : Is this required ? '' so I guess it is n't surprising it is gone __eou__ __eot__

Gold Truth response:

thanks ! How the heck did you figure that out ? __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) I do n't know how to do that , but I 'm not sure what you mean . __eou__ __eot__

2) I do n't know how to do that , but I 'm not sure what to do with it . __eou__ __eot__

3) I do n't know how to do that , but I 'm not sure what to do with it __eou__ __eot__

4) I do n't know how to do that , but I 'm not sure what to do . __eou__ __eot__

5) I do n't know how to do that , but I 'm not sure what to do __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) I ' m not sure how to do that , but I do n't know how to get it to work __eou__ __eot__

2) I ' m not sure how to do that , but I 'm not sure what to do with it . __eou__ __eot__

3) I ' m not sure how to do that , but I do n't know how to fix it __eou__ __eot__

4) I ' m not sure how to do that , but I do n't know how to get it to work . __eou__ __eot__

5) I ' m not sure how to do that , but I do n't know how to get it to work . __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) thanks , i 'll give it a try __eou__ __eot__

2) thanks , i 'll give it a try . __eou__ __eot__

3) thanks , i 'll give it a try , thanks __eou__ __eot__

4) thanks , i 'll give it a try . i 'll try that . __eou__ __eot__

5) thanks , i 'll give it a try . i 'll try it out __eou__ __eot__

Sample 3

Context:

anyone knows why my stock oneiric exports env var ' USERNAME ' ? I mean what is that used for ? I know of $ USER but not $ USERNAME . My precise install does n't export USERNAME __eou__ __eot__

looks like it used to be exported by lightdm , but the line had the comment `` // FIXME : Is this required ? '' so I guess it is n't surprising it is gone __eou__ __eot__

thanks ! How the heck did you figure that out ? __eou__ __eot__

Gold Truth response:

https : // bugs.launchpad.net/lightdm/+bug/864109/comments/3 __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) I do n't know how to do that , but I 'm not sure what you ' re trying to do . __eou__ __eot__

2) I do n't know how to do that , but I 'm not sure what you ' re trying to do __eou__ __eot__

3) I do n't know how to do that , but I 'm not sure what you ' re trying to do ... __eou__ __eot__

4) I do n't know how to do that , but I 'm not sure what you ' re doing . __eou__ __eot__

5) I do n't know how to do that , but I 'm not sure what you mean . __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) http : // www.debian.org/doc/manuals/reference/ch-tips.en.html # s-no-x-start __eou__ __eot__

2) http : // www.debian.org/doc/manuals/apt-howto/index.en.html __eou__ __eot__

3) http : // www.d ebian.org/doc/manuals/reference/ch-titups.en.html # s-no-root __eou__ __eot__

4) http : // www.debian.org/doc/manuals/reference/ch-tips.en.html # s-no-root __eou__ __eot__

5) http : // www.debian.org/doc/manuals/reference/ch-tips.en.html # s-no-root __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) i do n't know what you ' re talking about . __eou__ __eot__

2) i do n't know what you ' re talking about __eou__ __eot__

3) i do n't know what you ' re talking about , but i 'm not sure h ow to do that . __eou__ __eot__

4) i do n't know what you ' re talking about , but i 'm not sure what you mean . __eou__ __eot__

5) i do n't know what you ' re talking about , but i 'm not sure how to do that __eou__ __eot__

Sample 4

Context:

im trying to use ubuntu on my macbook pro retina __eou__ i read in the forums that ubuntu has a apple version now ? __eou__ __eot__

Gold Truth response:

not that ive ever heard of .. normal ubutnu should work on an intel based mac . there is the PPC version also . __eou__ you want total control ? or what are you wanting exactly ? __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) I do n't think you can . __eou__ __eot__

2) I do n't think so . __eou__ __eot__

3) I do n't think so __eou__ __eot__

4) I do n't think you can __eou__ __eot__

5) yes __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) yes __eou__ __eot__

2) yep __eou__ __eot__

3) yup __eou__ __eot__

4) no __eou__ __eot__

5) yeah __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) there is a ppc version of ubuntu __eou__ __eot__

2) there is a ppc version __eou__ __eot__

3) there is a ppc version of linux __eou__ __eot__

4) i have it installed __eou__ __eot__

5) there is a linux version __eou__ __eot__

Sample 5

Context:

I just added a second usb printer but not sure what the uri should read - can anyone help with usb printers ? __eou__ __eot__

firefox localhost : 631 __eou__ __eot__

Gold Truth response:

firefox ? __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) does it have to do with it ? __eou__ __eot__

2) does it have to do with the printer ? __eou__ __eot__

3) yes , but it does n't work for me __eou__ __eot__

4) yes , but it does n't show up on the network __eou__ __eot__

5) yes , but it does n't show up in the browser __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) thanks __eou__ __eot__

2) thanks : ) __eou__ __eot__

3) thanks ! __eou__ __eot__

4) thanks for the help __eou__ __eot__

5) thanks for the tip __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) thanks __eou__ __eot__

2) thanks i will try that __eou__ __eot__

3) thanks , i 'll try that __eou__ __eot__

4) thanks for the help __eou__ __eot__

5) thanks for the link __eou__ __eot__

Sample 6

Context:

I know I 'm probably doing something stupid here , but I ca n't figure out how to install ubuntu to sdb . All the installer shows is sda . gparted can see sdb __eou__ Any ideas ? __eou__ __eot__

use the ' somthign else/custome ' option and make your partitions on sdb as you want . ie : sdb1 = / sdb2 = /home/ sdb3 = swap . __eou__ __eot__

Yeah when I choose `` something else '' I 'm not seeing sdb in there either . I 'm not sure why __eou__ __eot__

Gold Truth response:

You can partion the HD with gparted from the live cd , then start the installer .. perhaps . __eou__ thats how i tend to do it . __eou__ The installers partion manager tool is a bit . annoying . __eou__ I also noticed the installer did not have a ' Install to a specific drive using the whold drive ' option .. __eou__ sort of annoying it will automate other things .. but not a fairly common case of a Seperate HD Just for linux . __eou__ __eot__

Beam5 responses for hred + avoid trigrams:

1) I do n't think so , but it 's not a good idea . __eou__ __eot__

2) I do n't think so , but it 's not a good idea , but I 'm not sure what you mean . __eou__ __eot__

3) I do n't think so , but it 's not a good idea . I 've never used it . __eou__ __eot__

4) I do n't think so , but it 's not a good idea , but I 'm not sure . __eou__ __eot__

5) I do n't think so , but it 's not a good idea __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams:

1) I ' m not sure what you ' re talking about , but I do n't know how to do that . __eou__ __eot__

2) do you have a swap partition ? __eou__ __eot__

3) I ' m not sure what you ' re talking about . I do n't know how to do that . __eou__ __eot__

4) I ' m not sure what you ' re talking about , but I do n't know how to fix it . __eou__ __eot__

5) I ' m not sure what you ' re talking about , but I do n't know how to fix it . __eou__ __eot__

Beam5 responses for hred with attention + avoid trigrams + downsampling of generic turns:

1) i do n't know what you ' re talking about . __eou__ __eot__

2) i do n't know what you ' re trying to do , but i 'm not sure what you mean . __eou__ __eot__

3) i do n't know what you ' re talking about , but i 'm not sure what you mean . __eou__ __eot__

4) i do n't know what you ' re trying to do , but i 'm not sure if it 's possible . __eou__ __eot__

5) i do n't know what you ' re trying to do , but i 'm not sure how to do it . __eou__ __eot__

We can see that removing generic turns slightly improves the generated responses since they seem to be less "generic".

We also see that overall, the model is quite generic in its generated turns, and is not resourceful at all.

- Here we take one context and generate the responses with an N-best of 200 with a model from the second set of experiments (hred with attention) just to see the range of responses we can get by increasing the beam size:

Context:

anyone knows why my stock oneiric exports env var ' USERNAME ' ? I mean what is that used for ? I know of $ USER but not $ USERNAME . My precise install does n't export USERNAME __eou__ __eot__

looks like it used to be exported by lightdm , but the line had the comment `` // FIXME : Is this required ? '' so I guess it is n't surprising it is gone __eou__ __eot__

Gold Truth response:

thanks ! How the heck did you figure that out ? __eou__ __eot__

Beam200 responses for hred with attention + avoid trigrams:

1) yeah , that 's what I was looking for : http : // cdimage.ubuntu.com/releases/dapper/release/ __eou__ __eot__

2) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=15 __eou__ __eot__

3) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=16 __eou__ __eot__

4) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=1724 __eou__ __eot__

5) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=17 __eou__ __eot__

6) yeah , that 's what I was looking for : http : // cdimage.ubuntu.com/releases/gutsy/release/ __eou__ __eot__

7) yeah , that 's what I was looking for : http : // www.debian.org/doc/manuals/apt-howto/index.en.html __eou__ __eot__

8) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=20 __eou__ __eot__

9) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=24 __eou__ __eot__

10) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=221 __eou__ __eot__

11) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=222 __eou__ __eot__

12) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=25 __eou__ __eot__

13) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=30 __eou__ __eot__

14) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? id=13 __eou__ __eot__

15) that ' s what I was looking for : http : // www.debian.org/doc/manuals/apt-howto/index.en.html __eou__ __eot__

16) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi ? __eou__ __eot__

17) yeah , that 's what I was looking for : http : // cdimage.ubuntu.com/releases/dapper/released/ __eou__ __eot__

18) yeah , that 's what I was looking for : http : // doc.gwos.org/index.php/Listen __eou__ __eot__

19) yeah , that 's what I was looking for : http : // ubuntuforums.org/archive/index.php/t-25695.html __eou__ __eot__

20) yeah , that 's what I was looking for : http : // ubuntuforums.org/archive/index.php/t-2569.html __eou__ __eot__

21) yeah , that 's what I was looking for : http : // ubuntuforums.org/archive/index.php/t-584.html __eou__ __eot__

22) yeah , that 's what I was looking for : http : // doc.gwos.org/index.php/Change __eou__ __eot__

23) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/RootSudo __eou__ __eot__

24) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cgi __eou__ __eot__

25) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/128 __eou__ __eot__

26) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/969 __eou__ __eot__

27) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/Listen __eou__ __eot__

28) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/4965 __eou__ __eot__

29) yeah , that 's what I was looking for : http : // doc.gwos.org/index.php/List __eou__ __eot__

30) yeah , that 's what I was looking for : http : // ubuntuforums.org/archive/index.php/t-57.html __eou__ __eot__

31) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/440 __eou__ __eot__

32) yeah , that 's what I was looking for : http : // ubuntuforums.org/archive/index.php/t-58.html __eou__ __eot__

33) yeah , that 's what I was looking for : http : // doc.gwos.org/index.php/Page __eou__ __eot__

34) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/48 __eou__ __eot__ yeah , that 's what I was looking for : http : // www.debian.org/doc/manuals/index.php __eou__ __eot__

35) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/38 __eou__ __eot__

36) yeah , that 's what I was looking for : http : // www.debian.org/doc/manuals/index.html __eou__ __eot__

37) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/show_bug.cfi __eou__ __eot__

38) yeah , that 's what I was looking for : http : // gentoo-wiki.com/HOWTO/ __eou__ __eot__

39) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/509 __eou__ __eot__

40) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/Properties __eou__ __eot__

41) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/965 __eou__ __eot__

42) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/4969 __eou__ __eot__

43) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/Apache __eou__ __eot__

44) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/Search __eou__ __eot__

45) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/4239 __eou__ __eot__

46) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/2568 __eou__ __eot__

47) yeah , that 's what I was looking for : http : // bugzilla.sourceforge.net/ __eou__ __eot__

48) yeah , that 's what I was looking for : http : //paste . ubuntulinux . nl/188 __eou__ __eot__

49) yeah , that 's what I was looking for : ) __eou__ __eot__

50) I do n't know how to do that , but I 'm not sure what you ' re talking about __eou__ __eot__

51) yeah , that 's what I was looking for : http : // www.sysresccd.org/ __eou__ __eot__

52) yeah , that 's what I was looking for : http : // en.wikipedia.org/wiki/Ubuntu __eou__ __eot__

53) thanks , I 'll give it a shot __eou__ __eot__

54) yeah , that 's what I was looking for , thanks : ) __eou__ __eot__

55) thanks , I 'll check it out . __eou__ __eot__

56) thanks , I 'll give it a shot . __eou__ __eot__

57) thanks , I 'll check it out : ) __eou__ __eot__

58) yeah , that 's what I was looking for __eou__ __eot__

59) I have no idea what you ' re talking about __eou__ __eot__

60) how do I do that ? __eou__ __eot__

61) yeah , that 's what I was looking for : http : // rafb.net/paste/ __eou__ __eot__

62) thanks , I 'll give it a shot : ) __eou__ __eot__

63) yeah , that 's what I was looking for : - ) __eou__ __eot__

64) thanks , I 'll look into that . __eou__ __eot__

65) what do you mean ? __eou__ __eot__

66) thanks , I 'll check it out __eou__ __eot__

67) yeah , that 's what I 'm trying to do : ) __eou__ __eot__

68) thanks , I 'll take a look at it . __eou__ __eot__

69) yeah , that 's what I was looking for : http : // bugzilla.ubuntu.com/ __eou__ __eot__

70) yeah , that 's what I was looking for . __eou__ __eot__

71) thanks , I 'll give it a try __eou__ __eot__

72) yeah , that 's what I was looking for : http : // rafb.net/paste __eou__ __eot__

73) yeah , that 's what I was looking for , thanks __eou__ __eot__

74) thanks , I 'll look into it . __eou__ __eot__

75) I do n't know how to do that , but I 'm not sure what the problem is __eou__ __eot__

76) thanks , I 'll take a look at that . __eou__ __eot__

77) is there a way to fix it ? __eou__ __eot__

78) thanks , I 'll take a look at that . I 'll check it out . __eou__ __eot__

79) that ' s what I was looking for : http : // www.sysresccd.org/ __eou__ __eot__

80) I do n't know how to do that , but I 'm not sure what you mean . __eou__ __eot__

81) thanks , I 'll give it a shot . I 'll try it out . __eou__ __eot__

82) I ca n't seem to get it to work : ( __eou__ __eot__

83) I have no idea what you ' re talking about . __eou__ __eot__

84) yeah , that 's what I was looking for , thanks . __eou__ __eot__

85) yeah , that 's what I 'm trying to do __eou__ __eot__

86) thanks , I 'll give it a try . __eou__ __eot__

87) yeah , that 's what I was looking for : http : // cdimage.ubuntu.com __eou__ __eot__

88) thanks , I 'll take a look at it __eou__ __eot__

89) thanks , I 'll try that . __eou__ __eot__

90) thanks , I 'll take a look __eou__ __eot__

91) thanks , I 'll give it a shot . I 'll look into that . __eou__ __eot__

92) I have no idea what you ' re talking about , but I 'm not sure . __eou__ __eot__

93) thanks , I 'll give it a shot . I 'll look into it . __eou__ __eot__

94) I ' m not sure , but I 'm not sure what you ' re talking about __eou__ __eot__

95) yeah , that 's what I 'm trying to do . __eou__ __eot__

96) I do n't think that 's what I 'm looking for __eou__ __eot__

97) I do n't know how to do that , but I 'm not sure . __eou__ __eot__

98) thanks , I 'll take a look at that . I 'll look into it . __eou__ __eot__

99) yeah , that 's what I was looking for ... __eou__ __eot__

100) I do n't think that 's what I 'm looking for . __eou__ __eot__

101) thanks , I 'll take a look at that . I 'll look into that . __eou__ __eot__

102) that ' s what I was looking for : http : // rafb.net/paste/ __eou__ __eot__

103) thanks , I 'll give it a shot . I 'll try that . __eou__ __eot__

104) what do you mean by that ? __eou__ __eot__

105) yeah , that 's what I was looking for . thanks for the help . __eou__ __eot__

106) thanks , I 'll take a look at that . I 'll check it out __eou__ __eot__

107) thanks , I 'll give it a go . __eou__ __eot__

108) thanks , I 'll take a look at that __eou__ __eot__

109) I do n't know how to do that , but I 'm not sure __eou__ __eot__

110) that ' s what I was looking for : http : // rafb.net/paste __eou__ __eot__

111) yeah , that 's what I was looking for . thanks for the help __eou__ __eot__

112) thanks , I 'll take a look . __eou__ __eot__

113) that ' s what I thought , but I do n't know how to fix it __eou__ __eot__

114) thanks , I 'll look into it __eou__ __eot__

115) thanks , I 'll give it a shot . I 'll try it out __eou__ __eot__

116) yeah , that 's what I was looking for , but I 'm not sure __eou__ __eot__

117) yeah , that 's what I 've been looking for __eou__ __eot__

118) yeah , that 's what I was looking for ! __eou__ __eot__

119) yeah , that 's what I was looking for . thanks __eou__ __eot__

120) I ' m trying to figure out how to get it to work : ) __eou__ __eot__

121) thanks , I 'll give it a shot ... __eou__ __eot__

122) that 's what I was looking for __eou__ __eot__

123) thanks , I 'll look into that __eou__ __eot__

124) thanks , I 'll check that out __eou__ __eot__

125) I 'm trying to figure out how to get it to work . __eou__ __eot__

126) thanks , I 'll try that __eou__ __eot__

127) I ca n't seem to get it to work . __eou__ __eot__

128) I 'm trying to figure out how to get it to work __eou__ __eot__

129) I do n't know how to do that . __eou__ __eot__

130) thanks , I 'll give it a go __eou__ __eot__

131) I do n't think that 's what I 'm looking for ... __eou__ __eot__

132) thanks , I 'll take a look at it now __eou__ __eot__

133) thanks , I 'll check it out now . __eou__ __eot__

134) I do n't know how to do that __eou__ __eot__

135) I ca n't seem to get it to work __eou__ __eot__

136) that 's what I was looking for . __eou__ __eot__

137) thanks , I 'll take a look at that ... __eou__ __eot__

138) I ' m not sure what you ' re talking about __eou__ __eot__

139) thanks , I 'll check it out ... __eou__ __eot__

140) thanks , I 'll take a look at it ... __eou__ __eot__

141) I ' ll give it a try , thanks . __eou__ __eot__

142) thanks , I 'll give it a try ... __eou__ __eot__

143) I ' m trying to figure out how to get it to work __eou__ __eot__

144) I ' ll take a look at that , thanks . __eou__ __eot__

145) I 'll check it out , thanks __eou__ __eot__

146) yeah , that 's what I was thinking . __eou__ __eot__

147) I ' ll check it out , thanks __eou__ __eot__

148) I ' ll check it out , thanks . __eou__ __eot__

149) I ' ll take a look at that , thanks __eou__ __eot__

150) thanks , I 'll look into that ... __eou__ __eot__

151) I ' ll give it a try , thanks __eou__ __eot__

152) yeah , that 's what I was thinking __eou__ __eot__

153) yeah , that 's what I was thinking of __eou__ __eot__

154) I do n't know what it is . __eou__ __eot__

155) thanks , I 'll look into it ... __eou__ __eot__

156) I ca n't seem to find it __eou__ __eot__

157) thanks , I 'll give it a look __eou__ __eot__

158) that 's what I was looking for ... __eou__ __eot__

159) thanks , I 'll try it . __eou__ __eot__

160) thanks , I 'll check it out now __eou__ __eot__

161) I 'll check it out . __eou__ __eot__

162) thanks , I 'll try that ... __eou__ __eot__

163) yeah , that 's what I thought __eou__ __eot__

164) thanks , I 'll look at it __eou__ __eot__

165) I do n't think so . __eou__ __eot__

166) thanks , I 'll try it __eou__ __eot__

167) not that I know of __eou__ __eot__

168) thanks , I 'll look at that __eou__ __eot__

169) I ' ll give it a shot __eou__ __eot__

170) thank you , I 'll try that __eou__ __eot__

171) I do n't think so __eou__ __eot__

172) I 'll try that , thanks __eou__ __eot__

173) I ca n't find it . __eou__ __eot__

174) thanks I 'll try that __eou__ __eot__

175) I 'll check it out __eou__ __eot__

176) I 'll try that . __eou__ __eot__

177) I ca n't find it __eou__ __eot__

178) thanks : ) __eou__ __eot__

179) I have no idea __eou__ __eot__

180) ok , thanks __eou__ __eot__

181) I do n't know __eou__ __eot__

182) I 'll try that __eou__ __eot__

183) huh ? __eou__ __eot__

184) why ? __eou__ __eot__

185) yes __eou__ __eot__

186) thanks __eou__ __eot__

187) no __eou__ __eot__

188) yeah __eou__ __eot__

189) nope __eou__ __eot__

190) ok __eou__ __eot__

- First of all, we clearly see that we always get generic responses.

- Although all the responses are generic, their meanings differ widely: the list contains "I don't know", "yes", "no" and "thanks". This suggests that, when asked to generate many responses with a large beam size, the decoder largely ignores the context and behaves like a plain language model.

Frequency of responses

Here we present the number of unique responses each model generated with greedy search (beam size of 1).

- Sorted, cumulative sum of the frequency of responses:

We lower-cased all responses in order to make the comparison as fair as possible.

| | Gold truth | HRED w/o attention | HRED w/ attention | HRED w/ attention, lower-casing and down-sampling |
| --- | --- | --- | --- | --- |
| Total number of responses | 72,762 | 72,762 | 72,762 | 72,762 |
| Total number of unique responses | 69,279 | 35,979 | 25,657 | 41,679 |

The x-axis is the index of unique responses. The responses of each model were sorted by frequency before being assigned an index.

The y-axis is the cumulative frequency of the responses.
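As a rough illustration of how such a curve can be computed, here is a minimal sketch. The function name and the toy responses are hypothetical, and sorting from most to least frequent is an assumption (the text only says the responses were sorted by frequency).

```python
from collections import Counter
from itertools import accumulate


def cumulative_response_frequency(responses):
    """Return (number of unique responses, cumulative frequency curve)."""
    # Lower-case so that responses differing only in case are counted together.
    counts = Counter(r.lower() for r in responses)
    # Assumed ordering: most frequent first; the position in this list is the x-axis index.
    sorted_freqs = sorted(counts.values(), reverse=True)
    # The running total is the y-axis value at each index.
    return len(counts), list(accumulate(sorted_freqs))


# Toy example (hypothetical data, not taken from the experiments).
responses = ["thanks", "Thanks", "I do n't know", "thanks", "ok"]
num_unique, curve = cumulative_response_frequency(responses)
print(num_unique, curve)
```

If every response is unique, each frequency is 1 and the curve is the identity line; duplicated responses make the curve rise steeply at first and end at a smaller index.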

The gold responses are almost all unique, so their curve is close to the identity line. In contrast, all three experiments produce many duplicate responses:

- HRED without attention generated roughly 36,000 unique responses (35,979), many of which occur with high frequency.

- Surprisingly, HRED with attention generated only around 26,000 unique responses (25,657). One possible explanation is that the model does not treat the encoder as important enough, and the attention mechanism makes it easier for the decoder to ignore it. Another possible factor is that the decoder is trained with "teacher forcing": at each step during training, the previous true token is fed into the decoder, regardless of what it predicted before. At test time, however, the true response to a context is unknown, so the decoder is fed its own previous prediction, and mistakes propagate over time.

- Finally, after removing generic turns, the model produces more unique responses (~42,000; 41,679 exactly), which indicates that our third experiment had the expected effect.
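The mismatch between teacher forcing and test-time decoding described above can be sketched with a toy example. Everything here is hypothetical: the "model" is a hand-written lookup table standing in for a trained decoder, chosen only to show how one early mistake spreads when the decoder consumes its own predictions.

```python
def decode(step_fn, gold, teacher_forcing):
    """Run a toy decoder for len(gold) steps and return its predictions."""
    prev = "<s>"  # start-of-sequence token
    predictions = []
    for t in range(len(gold)):
        pred = step_fn(prev)
        predictions.append(pred)
        # Teacher forcing feeds the true token; free-running feeds the prediction.
        prev = gold[t] if teacher_forcing else pred
    return predictions


# Hypothetical toy "model": maps the previous token to the next token.
# It makes a single mistake at the first step ("thanks" instead of "i").
toy_table = {
    "<s>": "thanks",
    "i": "'ll",
    "'ll": "try",
    "try": "that",
    "thanks": ",",
    ",": "i",
}


def step(prev):
    return toy_table.get(prev, "<unk>")


gold = ["i", "'ll", "try", "that"]
forced = decode(step, gold, teacher_forcing=True)
free = decode(step, gold, teacher_forcing=False)
print(forced)  # only the first token is wrong
print(free)    # the first mistake shifts every later prediction
```

With teacher forcing the decoder recovers right after its mistake because it is always conditioned on the true previous token. In free-running mode the same mistake changes every later input, so the whole response drifts away from the gold one.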

Human Evaluation

We present the results of a human evaluation of 200 randomly chosen samples, with each sample having been reviewed by 3 researchers. A sample is a context along with 5 responses as described earlier.